A clustered, transactional cache

Release 2.0.0 Habanero

Copyright © 2004, 2005, 2006, 2007 JBoss, a division of Red Hat Inc.

June 2007

Table of Contents

- Preface

- I. Introduction to JBoss Cache

- 1. Overview

- 2. User API

- 3. Configuration

- 4. Deploying JBoss Cache

- 4.1. Standalone Use / Programmatic Deployment

- 4.2. JMX-Based Deployment in JBoss AS (JBoss AS 5.x and 4.x)

- 4.3. Via JBoss Microcontainer (JBoss AS 5.x)

- 4.4. Binding to JNDI in JBoss AS

- 4.5. Runtime Management Information

- 5. Version Compatibility and Interoperability

- II. JBoss Cache Architecture

- 6. Architecture

- 7. Clustering

- 8. Cache Loaders

- 9. Eviction Policies

- 10. Transactions and Concurrency

- III. JBoss Cache References

This is the official JBoss Cache user guide. Along with its accompanying documents (an FAQ, a tutorial and a whole set of documents on PojoCache), it is freely available on the JBoss Cache documentation site.

In this book, JBoss Cache refers to JBoss Cache Core, a tree-structured, clustered, transactional cache. Pojo Cache, also a part of the JBoss Cache distribution, is documented separately. (Pojo Cache is a cache that deals with Plain Old Java Objects, complete with object relationships, and can cluster such POJOs while maintaining their relationships. Please see the Pojo Cache documentation for more information.)

This book is targeted at both developers wishing to use JBoss Cache as a clustering and caching library in their codebase and people who wish to "OEM" JBoss Cache by building on and extending its features. As such, this book is split into two major sections: one detailing the "User" API and the other going much deeper into specialist topics and the JBoss Cache architecture.

In general, a good knowledge of the Java programming language along with a strong appreciation and understanding of transactions and concurrent threads is necessary. No prior knowledge of JBoss Application Server is expected or required.

For further discussion, use the user forum linked on the JBoss Cache website. We also provide a mechanism for tracking bug reports and feature requests on the JBoss Cache JIRA issue tracker. If you are interested in the development of JBoss Cache or in translating this documentation into other languages, we'd love to hear from you. Please post a message on the user forum or contact us by using the JBoss Cache developer mailing list.

This book is specifically targeted at the JBoss Cache release of the same version number. It may not apply to older or newer releases of JBoss Cache. It is important that you use the documentation appropriate to the version of JBoss Cache you intend to use.

This section covers what developers would need to quickly start using JBoss Cache in their projects. It covers an overview of the concepts and API, configuration and deployment information.

JBoss Cache is a tree-structured, clustered, transactional cache. It is the backbone for many fundamental JBoss Application Server clustering services, including - in certain versions - clustering JNDI, HTTP and EJB sessions.

JBoss Cache can also be used as a standalone transactional and clustered caching library or even as an object-oriented data store. It can be embedded in other enterprise Java frameworks and application servers such as BEA WebLogic, IBM WebSphere, Tomcat, Spring, Hibernate, and many others. It is also very commonly used directly by standalone Java applications that do not run from within an application server, to maintain clustered state.

Pojo Cache is an extension of the core JBoss Cache API. Pojo Cache offers additional functionality such as:

- maintaining object references even after replication or persistence.

- fine-grained replication, where only modified object fields are replicated.

- "API-less" clustering model where pojos are simply annotated as being clustered.

Pojo Cache has its own complete set of documentation, including a user guide, FAQ and tutorial; as such, it is not discussed further in this book.

JBoss Cache offers a simple and straightforward API, where data (simple Java objects) can be placed in the cache and, based on configuration options selected, this data may be one or all of:

- replicated to some or all cache instances in a cluster.

- persisted to disk and/or a remote cluster ("far-cache").

- garbage collected from memory when memory runs low, and passivated to disk so state isn't lost.

In addition, JBoss Cache offers a rich set of enterprise-class features:

- being able to participate in JTA transactions (works with Java EE compliant TransactionManagers).

- attach to JMX servers and provide runtime statistics on the state of the cache.

- allow client code to attach listeners and receive notifications on cache events.

A cache is organised as a tree, with a single root. Each node in the tree essentially contains a Map, which acts as a store for key/value pairs. The only requirement placed on objects that are cached is that they implement java.io.Serializable. Note that this requirement does not exist for Pojo Cache.
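To make the data model concrete, here is a minimal, self-contained sketch of a tree in which every node holds a Map of key/value pairs and children are addressed by a path of names. It is a toy model for illustration only: `ToyNode` and its methods are hypothetical and are not the JBoss Cache `Node` implementation, and the sketch ignores locking, replication and the Serializable requirement.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical toy model: a tree with a single root where every node holds a Map. */
class ToyNode {
    private final Map<Object, ToyNode> children = new LinkedHashMap<>();
    private final Map<Object, Object> data = new HashMap<>();

    /** Walks the path from this node, creating any missing nodes along the way. */
    ToyNode addChild(List<?> path) {
        ToyNode current = this;
        for (Object name : path) {
            ToyNode next = current.children.get(name);
            if (next == null) {
                next = new ToyNode();
                current.children.put(name, next);
            }
            current = next;
        }
        return current;
    }

    /** Returns the node at the given path, or null if any element of the path is missing. */
    ToyNode getChild(List<?> path) {
        ToyNode current = this;
        for (Object name : path) {
            current = current.children.get(name);
            if (current == null) {
                return null;
            }
        }
        return current;
    }

    // Each node's own key/value store.
    Object put(Object key, Object value) { return data.put(key, value); }
    Object get(Object key) { return data.get(key); }
    Object remove(Object key) { return data.remove(key); }
}
```

Creating `/griffin/peter` implicitly creates the intermediate `griffin` node, and each node keeps its own independent map.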

JBoss Cache can be either local or replicated. Local trees exist only inside the JVM in which they are created, whereas replicated trees propagate any changes to some or all other trees in the same cluster. A cluster may span different hosts on a network or just different JVMs on a single host.

When a change is made to an object in the cache and that change is done in the context of a transaction, the replication of changes is deferred until the transaction commits successfully. All modifications are kept in a list associated with the caller's transaction. When the transaction commits, we replicate the changes. If the transaction is rolled back instead, we simply undo the changes locally, resulting in zero network traffic and overhead. For example, if a caller makes 100 modifications and then rolls back the transaction, nothing is replicated and no network traffic results.

If a caller has no transaction associated with it (and the isolation level is not NONE; more about this later), we replicate right after each modification. In the above case, for example, we would send 100 messages, plus an additional message for the rollback. In this sense, running without a transaction is analogous to running with auto-commit switched on in JDBC terminology, where each operation is committed automatically.
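The difference in network traffic can be illustrated with a toy model that simply counts outgoing messages. This is a simplification, not JBoss Cache's replication code: `ToyReplicator` is hypothetical, and it assumes a commit ships all queued changes as a single batched message, whereas the real protocol may send more than one message per transaction (for example, a prepare and a commit).

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical toy model of when modifications are sent to the cluster. */
class ToyReplicator {
    private final List<String> pending = new ArrayList<>();
    private boolean inTransaction;
    private int messagesSent;

    void begin() { inTransaction = true; }

    void modify(String change) {
        if (inTransaction) {
            pending.add(change);   // deferred until commit
        } else {
            messagesSent++;        // "auto-commit": one message per modification
        }
    }

    void commit() {
        if (!pending.isEmpty()) {
            messagesSent++;        // assumption: one batched message per commit
        }
        pending.clear();
        inTransaction = false;
    }

    void rollback() {
        pending.clear();           // queued changes are discarded; nothing is sent
        inTransaction = false;
    }

    int messagesSent() { return messagesSent; }
}
```

With 100 modifications, a rolled-back transaction sends nothing, a committed one sends a single batch in this model, and running without a transaction sends 100 messages.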

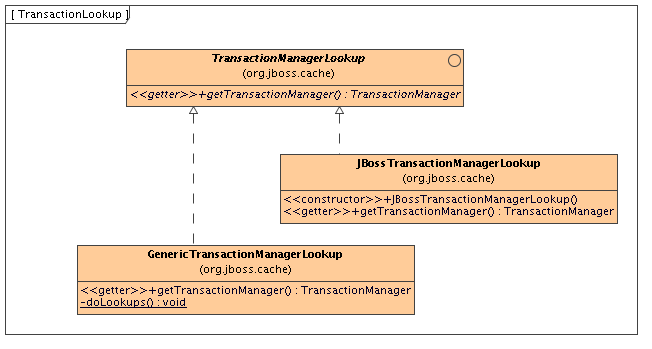

JBoss Cache works out of the box with most popular transaction managers, and even provides an API where custom transaction manager lookups can be written.

The cache is also completely thread-safe. It uses a pessimistic locking scheme for nodes in the tree by default, with an optimistic locking scheme as a configurable option. With pessimistic locking, the degree of concurrency can be tuned using a number of isolation levels, corresponding to database-style transaction isolation levels, i.e., SERIALIZABLE, REPEATABLE_READ, READ_COMMITTED, READ_UNCOMMITTED and NONE. Concurrency, locking and isolation levels will be discussed later.

JBoss Cache requires Java 5.0 (or newer).

However, it is possible to build a Java 1.4.x-compatible JBoss Cache binary by using JBossRetro to retroweave the Java 5.0 binaries. Note that Red Hat Inc. does not currently offer professional support for the retroweaved binary, and the Java 1.4.x-compatible binary is not included in the binary distribution. See this wiki page for details on building the retroweaved binary yourself.

In addition to Java 5.0, JBoss Cache depends at a minimum on JGroups and Apache's commons-logging. JBoss Cache ships with all dependent libraries necessary to run out of the box.

JBoss Cache is an open source product, using the business and OEM-friendly OSI-approved LGPL license. Commercial development support, production support and training for JBoss Cache is available through JBoss, a division of Red Hat Inc. JBoss Cache is a part of JBoss Professional Open Source JEMS (JBoss Enterprise Middleware Suite).

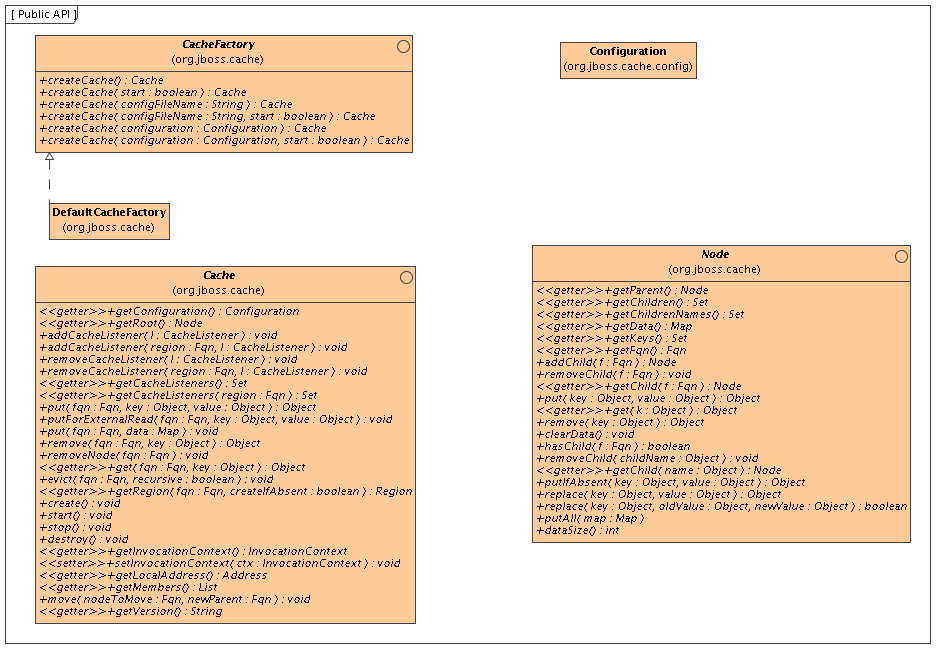

The Cache interface is the primary mechanism for interacting with JBoss Cache. It is constructed and optionally started using the CacheFactory. The CacheFactory allows you to create a Cache either from a Configuration object or from an XML file. Once you have a reference to a Cache, you can use it to look up Node objects in the tree structure and store data in the tree.

Reviewing the javadoc for the above interfaces is the best way to learn the API. Below we cover some of the main points.

An instance of the Cache interface can only be created via a CacheFactory. (This is unlike JBoss Cache 1.x, where an instance of the old TreeCache class could be directly instantiated.)

CacheFactory provides a number of overloaded methods for creating a Cache, but they all do the same thing:

- Gain access to a Configuration, either by having one passed in as a method parameter, or by parsing XML content and constructing one. The XML content can come from a provided input stream or from a classpath or filesystem location. See the chapter on Configuration for more on obtaining a Configuration.

- Instantiate the Cache and provide it with a reference to the Configuration .

- Optionally invoke the cache's create() and start() methods.
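The three steps above amount to a small factory method. The sketch below shows that shape using hypothetical `ToyConfig`, `ToyCache` and `ToyCacheFactory` classes; it is not the `DefaultCacheFactory` source, and the toy cache only records whether its lifecycle methods were called.

```java
/** Hypothetical configuration holder; a real Configuration has many more settings. */
class ToyConfig {
    String clusterName;   // hypothetical setting, left unset here
}

/** Hypothetical cache that only tracks its lifecycle state. */
class ToyCache {
    final ToyConfig config;
    boolean created;
    boolean started;

    ToyCache(ToyConfig config) { this.config = config; }  // keeps a reference to its config

    void create() { created = true; }
    void start() { started = true; }
}

/** Hypothetical factory mirroring the steps listed above. */
class ToyCacheFactory {
    static ToyCache createCache(ToyConfig config, boolean start) {
        // Step 1 (obtaining a config) is done by the caller in this overload.
        ToyCache cache = new ToyCache(config);   // step 2: instantiate with the config
        if (start) {                             // step 3: optionally create() and start()
            cache.create();
            cache.start();
        }
        return cache;
    }

    static ToyCache createCache(ToyConfig config) {
        return createCache(config, true);        // default: create and start
    }
}
```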

An example of the simplest mechanism for creating and starting a cache, using the default configuration values:

```java
CacheFactory factory = DefaultCacheFactory.getInstance();
Cache cache = factory.createCache();
```

Here we tell the CacheFactory to find and parse a configuration file on the classpath:

```java
CacheFactory factory = DefaultCacheFactory.getInstance();
Cache cache = factory.createCache("cache-configuration.xml");
```

Here we configure the cache from a file, but want to programmatically change a configuration element. So, we tell the factory not to start the cache, and instead do it ourselves:

```java
CacheFactory factory = DefaultCacheFactory.getInstance();
Cache cache = factory.createCache("cache-configuration.xml", false);
Configuration config = cache.getConfiguration();
config.setClusterName(this.getClusterName());

// Have to create and start cache before using it
cache.create();
cache.start();
```

Next, let's use the Cache API to access a Node in the cache and then do some simple reads and writes to that node.

```java
// Let's get hold of the root node.
Node rootNode = cache.getRoot();

// Remember, JBoss Cache stores data in a tree structure.
// All nodes in the tree structure are identified by Fqn objects.
Fqn peterGriffinFqn = Fqn.fromString("/griffin/peter");

// Create a new Node
Node peterGriffin = rootNode.addChild(peterGriffinFqn);

// let's store some data in the node
peterGriffin.put("isCartoonCharacter", Boolean.TRUE);
peterGriffin.put("favouriteDrink", new Beer());

// some tests (just assume this code is in a JUnit test case)
// get() returns an Object, so compare it rather than passing it to assertTrue()
assertEquals(Boolean.TRUE, peterGriffin.get("isCartoonCharacter"));
assertEquals(peterGriffinFqn, peterGriffin.getFqn());
assertTrue(rootNode.hasChild(peterGriffinFqn));

Set<String> keys = new HashSet<String>();
keys.add("isCartoonCharacter");
keys.add("favouriteDrink");
assertEquals(keys, peterGriffin.getKeys());

// let's remove some data from the node
peterGriffin.remove("favouriteDrink");
assertNull(peterGriffin.get("favouriteDrink"));

// let's remove the node altogether
rootNode.removeChild(peterGriffinFqn);
assertFalse(rootNode.hasChild(peterGriffinFqn));
```

The Cache interface also exposes put/get/remove operations that take an Fqn as an argument:

```java
Fqn peterGriffinFqn = Fqn.fromString("/griffin/peter");

cache.put(peterGriffinFqn, "isCartoonCharacter", Boolean.TRUE);
cache.put(peterGriffinFqn, "favouriteDrink", new Beer());

// read back through the Cache, not through a Node reference
assertEquals(Boolean.TRUE, cache.get(peterGriffinFqn, "isCartoonCharacter"));
assertTrue(cache.getRoot().hasChild(peterGriffinFqn));

cache.remove(peterGriffinFqn, "favouriteDrink");
assertNull(cache.get(peterGriffinFqn, "favouriteDrink"));

cache.removeNode(peterGriffinFqn);
assertFalse(cache.getRoot().hasChild(peterGriffinFqn));
```

The previous section used the Fqn class in its examples; now let's learn a bit more about that class.

A Fully Qualified Name (Fqn) encapsulates a list of names which represent a path to a particular location in the cache's tree structure. The elements in the list are typically Strings but can be any Object or a mix of different types.

This path can be absolute (i.e., relative to the root node), or relative to any node in the cache. The documentation on each API call that makes use of Fqn tells you whether the API expects a relative or absolute Fqn.

The Fqn class provides a variety of constructors; see the javadoc for all the possibilities. The following illustrates the most commonly used approaches to creating an Fqn:

```java
// Create an Fqn pointing to node 'Joe' under parent node 'Smith'
// under the 'people' section of the tree

// Parse it from a String
Fqn<String> joeFqn = Fqn.fromString("/people/Smith/Joe/");

// Build it directly. A bit more efficient to construct than parsing
String[] strings = new String[] { "people", "Smith", "Joe" };
Fqn<String> joeFqnDirect = new Fqn<String>(strings);

// Here we want to use types other than String
Object[] objs = new Object[] { "accounts", "NY", new Integer(12345) };
Fqn<Object> acctFqn = new Fqn<Object>(objs);
```

Note that

```java
Fqn<String> f = new Fqn<String>("/a/b/c");
```

is not the same as

```java
Fqn<String> f = Fqn.fromString("/a/b/c");
```

The former results in an Fqn with a single element, called "/a/b/c", which hangs directly under the cache root. The latter results in a 3-element Fqn, where "c" indicates a child of "b", which is a child of "a", and "a" hangs off the cache root. Another way to look at it is that the "/" separator is only parsed when it forms part of a String passed in to Fqn.fromString(), and not otherwise.
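The distinction comes down to whether "/" is treated as a separator. The sketch below mimics the two behaviours with plain lists of names; `ToyFqn` is a hypothetical stand-in written for illustration, not the real `Fqn` parser, which may handle edge cases (such as empty segments) differently.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical helpers contrasting single-element and parsed paths. */
class ToyFqn {
    /** Like new Fqn<String>("/a/b/c"): the whole string is one element. */
    static List<String> singleElement(String name) {
        return Collections.singletonList(name);
    }

    /** Like Fqn.fromString("/a/b/c"): split on '/' and ignore empty segments. */
    static List<String> fromString(String path) {
        List<String> elements = new ArrayList<>();
        for (String part : path.split("/")) {
            if (!part.isEmpty()) {
                elements.add(part);
            }
        }
        return elements;
    }
}
```

Here `singleElement("/a/b/c")` yields one element, `fromString("/a/b/c")` yields `[a, b, c]`, and `fromString("/")` yields an empty list, i.e. the root.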

The JBoss Cache API in the 1.x releases included many overloaded convenience methods that took a string in the "/a/b/c" format in place of an Fqn . In the interests of API simplicity, no such convenience methods are available in the JBC 2.x API.

It is good practice to stop and destroy your cache when you are done using it, particularly if it is a clustered cache and has thus used a JGroups channel. Stopping and destroying a cache ensures resources like the JGroups channel are properly cleaned up.

```java
cache.stop();
cache.destroy();
```

Note also that a cache that has had stop() invoked on it can be started again with a new call to start(). Similarly, a cache that has had destroy() invoked on it can be created again with a new call to create() (and then started again with a start() call).
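The lifecycle rules above can be summarised as a small state machine. The sketch below encodes one plausible reading of them; `ToyLifecycle` and its states are hypothetical and do not reproduce JBoss Cache's own status checks or the exceptions it throws.

```java
/** Hypothetical lifecycle: create -> start -> stop -> (start again | destroy) -> create ... */
class ToyLifecycle {
    enum State { INSTANTIATED, CREATED, STARTED, STOPPED, DESTROYED }

    private State state = State.INSTANTIATED;

    State state() { return state; }

    void create() {
        require(state == State.INSTANTIATED || state == State.DESTROYED, "create");
        state = State.CREATED;
    }

    void start() {
        require(state == State.CREATED || state == State.STOPPED, "start");  // restart after stop
        state = State.STARTED;
    }

    void stop() {
        require(state == State.STARTED, "stop");
        state = State.STOPPED;
    }

    void destroy() {
        require(state == State.CREATED || state == State.STOPPED, "destroy");
        state = State.DESTROYED;
    }

    /** Rejects transitions this toy model does not allow. */
    private void require(boolean allowed, String op) {
        if (!allowed) {
            throw new IllegalStateException("cannot " + op + " while " + state);
        }
    }
}
```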

Although technically not part of the API, the mode in which the cache is configured to operate affects the cluster-wide behavior of any put or remove operation, so we'll briefly mention the various modes here.

JBoss Cache modes are denoted by the org.jboss.cache.config.Configuration.CacheMode enumeration. They consist of:

- LOCAL - local, non-clustered cache. Local caches don't join a cluster and don't communicate with other caches in a cluster. Therefore their contents don't need to be Serializable; however, we recommend making them Serializable, allowing you the flexibility to change the cache mode at any time.

- REPL_SYNC - synchronous replication. Replicated caches replicate all changes to the other caches in the cluster. Synchronous replication means that changes are replicated and the caller blocks until replication acknowledgements are received.

- REPL_ASYNC - asynchronous replication. Similar to REPL_SYNC above, replicated caches replicate all changes to the other caches in the cluster. Being asynchronous, the caller does not block until replication acknowledgements are received.

- INVALIDATION_SYNC - if a cache is configured for invalidation rather than replication, every time data is changed in a cache, other caches in the cluster receive a message informing them that their data is now stale and should be evicted from memory. This reduces replication overhead while still invalidating stale data on remote caches.

- INVALIDATION_ASYNC - as above, except this invalidation mode causes invalidation messages to be broadcast asynchronously.

See the chapter on Clustering for more details on how the cache's mode affects behavior. See the chapter on Configuration for info on how to configure things like the cache's mode.
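From a remote peer's point of view, the modes differ in what a local put does to the peer's copy of the data. The following sketch illustrates this with a hypothetical `ToyMode` enum that mirrors the names of the `CacheMode` values above; the real enumeration and its helper methods may differ, and the toy ignores acknowledgements, failures and transactions.

```java
import java.util.Map;

/** Hypothetical mirror of the five cache modes. */
enum ToyMode {
    LOCAL, REPL_SYNC, REPL_ASYNC, INVALIDATION_SYNC, INVALIDATION_ASYNC;

    boolean isSynchronous() { return this == REPL_SYNC || this == INVALIDATION_SYNC; }
    boolean isInvalidation() { return this == INVALIDATION_SYNC || this == INVALIDATION_ASYNC; }
}

class ToyPeers {
    /** Applies a local put and updates what a single remote peer ends up holding. */
    static void put(ToyMode mode, Map<String, Object> local, Map<String, Object> remote,
                    String key, Object value) {
        local.put(key, value);
        switch (mode) {
            case LOCAL:
                break;                    // no cluster communication at all
            case REPL_SYNC:
            case REPL_ASYNC:
                remote.put(key, value);   // the new value is shipped to the peer
                break;
            case INVALIDATION_SYNC:
            case INVALIDATION_ASYNC:
                remote.remove(key);       // the peer drops its now-stale copy
                break;
        }
    }
}
```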

The @org.jboss.cache.notifications.annotation.CacheListener annotation is a convenient mechanism for receiving notifications from a cache about events that happen in the cache. Classes annotated with @CacheListener need to be public classes. In addition, the class needs to have one or more methods annotated with one of the method-level annotations (in the org.jboss.cache.notifications.annotation package). Methods annotated as such need to be public, have a void return type, and accept a single parameter of type org.jboss.cache.notifications.event.Event or one of its subtypes.

- @CacheStarted - methods annotated as such receive a notification when the cache is started. Methods need to accept a parameter type which is assignable from org.jboss.cache.notifications.event.CacheStartedEvent.

- @CacheStopped - methods annotated as such receive a notification when the cache is stopped. Methods need to accept a parameter type which is assignable from org.jboss.cache.notifications.event.CacheStoppedEvent.

- @NodeCreated - methods annotated as such receive a notification when a node is created. Methods need to accept a parameter type which is assignable from org.jboss.cache.notifications.event.NodeCreatedEvent.

- @NodeRemoved - methods annotated as such receive a notification when a node is removed. Methods need to accept a parameter type which is assignable from org.jboss.cache.notifications.event.NodeRemovedEvent.

- @NodeModified - methods annotated as such receive a notification when a node is modified. Methods need to accept a parameter type which is assignable from org.jboss.cache.notifications.event.NodeModifiedEvent.

- @NodeMoved - methods annotated as such receive a notification when a node is moved. Methods need to accept a parameter type which is assignable from org.jboss.cache.notifications.event.NodeMovedEvent.

- @NodeVisited - methods annotated as such receive a notification when a node is visited. Methods need to accept a parameter type which is assignable from org.jboss.cache.notifications.event.NodeVisitedEvent.

- @NodeLoaded - methods annotated as such receive a notification when a node is loaded from a CacheLoader. Methods need to accept a parameter type which is assignable from org.jboss.cache.notifications.event.NodeLoadedEvent.

- @NodeEvicted - methods annotated as such receive a notification when a node is evicted from memory. Methods need to accept a parameter type which is assignable from org.jboss.cache.notifications.event.NodeEvictedEvent.

- @NodeActivated - methods annotated as such receive a notification when a node is activated. Methods need to accept a parameter type which is assignable from org.jboss.cache.notifications.event.NodeActivatedEvent.

- @NodePassivated - methods annotated as such receive a notification when a node is passivated. Methods need to accept a parameter type which is assignable from org.jboss.cache.notifications.event.NodePassivatedEvent.

- @TransactionRegistered - methods annotated as such receive a notification when the cache registers a javax.transaction.Synchronization with a registered transaction manager. Methods need to accept a parameter type which is assignable from org.jboss.cache.notifications.event.TransactionRegisteredEvent.

- @TransactionCompleted - methods annotated as such receive a notification when the cache receives a commit or rollback call from a registered transaction manager. Methods need to accept a parameter type which is assignable from org.jboss.cache.notifications.event.TransactionCompletedEvent.

- @ViewChanged - methods annotated as such receive a notification when the group structure of the cluster changes. Methods need to accept a parameter type which is assignable from org.jboss.cache.notifications.event.ViewChangedEvent.

- @CacheBlocked - methods annotated as such receive a notification when the cluster requests that cache operations are blocked for a state transfer event. Methods need to accept a parameter type which is assignable from org.jboss.cache.notifications.event.CacheBlockedEvent.

- @CacheUnblocked - methods annotated as such receive a notification when the cluster requests that cache operations are unblocked after a state transfer event. Methods need to accept a parameter type which is assignable from org.jboss.cache.notifications.event.CacheUnblockedEvent.

Refer to the javadocs on the annotations as well as the Event subtypes for details of what is passed in to your method, and when.

Example:

```java
import org.jboss.cache.notifications.annotation.*;
import org.jboss.cache.notifications.event.Event;
import org.jboss.cache.notifications.event.NodeEvent;

@CacheListener
public class MyListener
{
   @CacheStarted
   @CacheStopped
   public void cacheStartStopEvent(Event e)
   {
      switch (e.getType())
      {
         // enum constants must be unqualified in case labels
         case CACHE_STARTED:
            System.out.println("Cache has started");
            break;
         case CACHE_STOPPED:
            System.out.println("Cache has stopped");
            break;
      }
   }

   @NodeCreated
   @NodeRemoved
   @NodeVisited
   @NodeModified
   @NodeMoved
   public void logNodeEvent(NodeEvent ne)
   {
      System.out.println("An event on node " + ne.getFqn() + " has occurred");
   }
}
```
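Annotation-driven listeners of this kind are typically dispatched through reflection. To illustrate the general technique, the sketch below uses two hypothetical annotations, `@OnStart` and `@OnStop`, a hypothetical `ToyNotifier` and a sample `CountingListener`; it is not JBoss Cache's notifier, but it applies the method rules described above (public, `void` return type, exactly one parameter that can accept the event).

```java
import java.lang.annotation.Annotation;
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;

/** Hypothetical method-level annotations, standing in for @CacheStarted / @CacheStopped. */
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface OnStart {}

@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface OnStop {}

/** Hypothetical notifier that dispatches an event to annotated listener methods. */
class ToyNotifier {
    /** Returns how many listener methods were invoked. */
    static int fire(Object listener, Class<? extends Annotation> type, Object event) {
        int invoked = 0;
        for (Method m : listener.getClass().getMethods()) {   // public methods only
            if (!m.isAnnotationPresent(type)) {
                continue;
            }
            if (m.getReturnType() != void.class || m.getParameterTypes().length != 1) {
                throw new IllegalArgumentException("Invalid listener method: " + m);
            }
            if (m.getParameterTypes()[0].isInstance(event)) {  // parameter must accept the event
                try {
                    m.invoke(listener, event);
                } catch (ReflectiveOperationException e) {
                    throw new IllegalStateException("Listener invocation failed", e);
                }
                invoked++;
            }
        }
        return invoked;
    }
}

/** Sample listener that counts the events it receives. */
class CountingListener {
    public int starts;
    public int stops;

    @OnStart
    public void started(String event) { starts++; }

    @OnStop
    public void stopped(String event) { stops++; }
}
```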

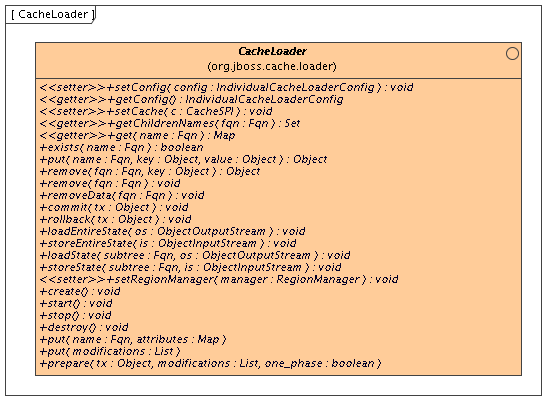

Cache loaders are an important part of JBoss Cache. They allow persistence of nodes to disk or to remote cache clusters, and allow for passivation when caches run out of memory. In addition, cache loaders allow JBoss Cache to perform 'warm starts', where in-memory state can be preloaded from persistent storage. JBoss Cache ships with a number of cache loader implementations.

- org.jboss.cache.loader.FileCacheLoader - a basic, filesystem-based cache loader that persists data to disk. Non-transactional and not very performant, but a very simple solution. Used mainly for testing and not recommended for production use.

- org.jboss.cache.loader.JDBCCacheLoader - uses a JDBC connection to store data. Connections could be created and maintained in an internal pool (uses the c3p0 pooling library) or from a configured DataSource. The database this CacheLoader connects to could be local or remotely located.

- org.jboss.cache.loader.BdbjeCacheLoader - uses Oracle's BerkeleyDB file-based transactional database to persist data. Transactional and very performant, but potentially restrictive license.

- org.jboss.cache.loader.JdbmCacheLoader - an upcoming open source alternative to BerkeleyDB.

- org.jboss.cache.loader.tcp.TcpCacheLoader - uses a TCP socket to "persist" data to a remote cluster, using a "far cache" pattern. [1]

- org.jboss.cache.loader.ClusteredCacheLoader - used as a "read-only" CacheLoader, where other nodes in the cluster are queried for state.

These CacheLoaders, along with advanced aspects and tuning issues, are discussed in the chapter dedicated to CacheLoaders.
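The interplay between an in-memory cache and a cache loader (preloading on a warm start, writing state out when memory is reclaimed, and reading it back on demand) can be sketched with two maps. This is a hypothetical toy, not the `CacheLoader` interface: the `store` map stands in for whatever backend (filesystem, JDBC, remote cluster) a real loader would use, and entries are handled by flat key rather than by tree node.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical toy: an in-memory map backed by a "persistent" store map. */
class ToyLoaderCache {
    final Map<String, Object> memory = new HashMap<>();
    final Map<String, Object> store;   // stands in for a filesystem, database or remote cluster

    ToyLoaderCache(Map<String, Object> store) { this.store = store; }

    /** Warm start: copy persisted state into memory before serving requests. */
    void preload() { memory.putAll(store); }

    /** Passivation: write an entry to the store and free it from memory. */
    void passivate(String key) {
        if (memory.containsKey(key)) {
            store.put(key, memory.remove(key));
        }
    }

    /** On a memory miss, load the entry from the store and keep a copy in memory. */
    Object get(String key) {
        if (!memory.containsKey(key) && store.containsKey(key)) {
            memory.put(key, store.get(key));
        }
        return memory.get(key);
    }
}
```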

Eviction policies are the counterpart to CacheLoaders. They are necessary to make sure the cache does not run out of memory: when the cache starts to fill, the eviction algorithm, running in a separate thread, offloads in-memory state to the CacheLoader and frees up memory. Eviction policies can be configured on a per-region basis, so different subtrees in the cache can have different eviction preferences. JBoss Cache ships with several eviction policies:

- org.jboss.cache.eviction.LRUPolicy - an eviction policy that evicts the least recently used nodes when thresholds are hit.

- org.jboss.cache.eviction.LFUPolicy - an eviction policy that evicts the least frequently used nodes when thresholds are hit.

- org.jboss.cache.eviction.MRUPolicy - an eviction policy that evicts the most recently used nodes when thresholds are hit.

- org.jboss.cache.eviction.FIFOPolicy - an eviction policy that creates a first-in-first-out queue and evicts the oldest nodes when thresholds are hit.

- org.jboss.cache.eviction.ExpirationPolicy - an eviction policy that selects nodes for eviction based on an expiry time each node is configured with.

- org.jboss.cache.eviction.ElementSizePolicy - an eviction policy that selects nodes for eviction based on the number of key/value pairs held in the node.

Detailed configuration, and how to implement custom eviction policies, are discussed in the chapter dedicated to eviction policies.
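The LRU and FIFO policies differ only in how the eviction order is maintained. As a self-contained illustration, the JDK's `java.util.LinkedHashMap` can express both orderings: with `accessOrder = true` it iterates from least to most recently accessed, and with `accessOrder = false` in insertion order. This is not JBoss Cache's `LRUPolicy` or `FIFOPolicy` (which run in a separate eviction thread and support further thresholds such as `timeToLiveSeconds`); the `maxNodes` limit is borrowed from the region setting of the same name used in the configuration examples.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Bounded map that evicts its eldest entry once more than maxNodes entries are held. */
class BoundedMap<K, V> extends LinkedHashMap<K, V> {
    private final int maxNodes;

    /** accessOrder = true gives LRU ordering; false gives FIFO (insertion) ordering. */
    BoundedMap(int maxNodes, boolean accessOrder) {
        super(16, 0.75f, accessOrder);
        this.maxNodes = maxNodes;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > maxNodes;   // called after each put; drops the eldest entry when over the limit
    }
}
```

With a limit of two, inserting `a` and `b`, reading `a`, then inserting `c` evicts `b` under LRU ordering but `a` under FIFO ordering.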

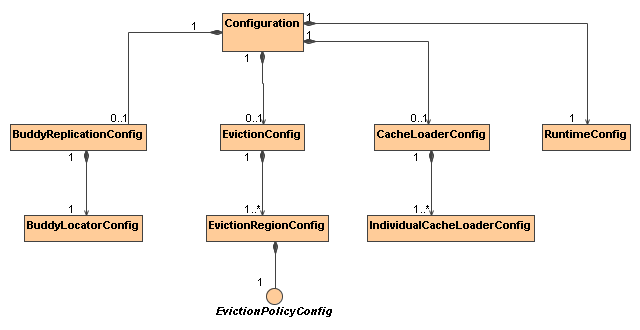

The org.jboss.cache.config.Configuration class (along with its component parts) is a Java Bean that encapsulates the configuration of the Cache and all of its architectural elements (cache loaders, eviction policies, etc.).

The Configuration exposes numerous properties which are summarized in the configuration reference section of this book and many of which are discussed in later chapters. Any time you see a configuration option discussed in this book, you can assume that the Configuration class or one of its component parts exposes a simple property setter/getter for that configuration option.

As discussed in the User API section, before a Cache can be created, the CacheFactory must be provided with a Configuration object, or with a file name or input stream from which to parse a Configuration from XML. The following sections describe how to accomplish this.

The most convenient way to configure JBoss Cache is via an XML file. The JBoss Cache distribution ships with a number of configuration files for common use cases. It is recommended that these files be used as a starting point, and tweaked to meet specific needs.

Here is a simple example configuration file:

```xml
<?xml version="1.0" encoding="UTF-8"?>

<!-- ===================================================================== -->
<!--                                                                       -->
<!--  Sample JBoss Cache Service Configuration                             -->
<!--                                                                       -->
<!-- ===================================================================== -->

<server>
   <mbean code="org.jboss.cache.jmx.CacheJmxWrapper" name="jboss.cache:service=Cache">

      <!-- Configure the TransactionManager -->
      <attribute name="TransactionManagerLookupClass">
         org.jboss.cache.transaction.GenericTransactionManagerLookup
      </attribute>

      <!-- Node locking level : SERIALIZABLE
                                REPEATABLE_READ (default)
                                READ_COMMITTED
                                READ_UNCOMMITTED
                                NONE -->
      <attribute name="IsolationLevel">READ_COMMITTED</attribute>

      <!-- Lock parent before doing node additions/removes -->
      <attribute name="LockParentForChildInsertRemove">true</attribute>

      <!-- Valid modes are LOCAL (default)
                           REPL_ASYNC
                           REPL_SYNC
                           INVALIDATION_ASYNC
                           INVALIDATION_SYNC -->
      <attribute name="CacheMode">LOCAL</attribute>

      <!-- Max number of milliseconds to wait for a lock acquisition -->
      <attribute name="LockAcquisitionTimeout">15000</attribute>

      <!-- Specific eviction policy configurations. This is LRU -->
      <attribute name="EvictionConfig">
         <config>
            <attribute name="wakeUpIntervalSeconds">5</attribute>
            <attribute name="policyClass">org.jboss.cache.eviction.LRUPolicy</attribute>
            <!-- Cache wide default -->
            <region name="/_default_">
               <attribute name="maxNodes">5000</attribute>
               <attribute name="timeToLiveSeconds">1000</attribute>
            </region>
         </config>
      </attribute>
   </mbean>
</server>
```

Another, more complete, sample XML file is included in the configuration reference section of this book, along with a handy look-up table explaining the various options.

For historical reasons, the format of the JBoss Cache configuration file follows that of a JBoss AS Service Archive (SAR) deployment descriptor (and it can still be used as such inside JBoss AS). Because of this dual usage, you may see elements in some configuration files (such as depends or classpath) that are not relevant outside JBoss AS. These can safely be ignored.
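Because the file follows the SAR descriptor layout, the top-level settings are simply `<attribute name="...">` children of the `<mbean>` element. To show that structure, here is a sketch that reads those values with the JDK's standard DOM parser. It is not JBoss Cache's own configuration parser; `ToyXmlConfig.readAttributes` is a hypothetical helper that deliberately skips nested blocks such as `EvictionConfig`.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Element;
import org.w3c.dom.Node;
import org.w3c.dom.NodeList;

/** Hypothetical reader for the simple attribute elements of a SAR-style config file. */
class ToyXmlConfig {
    /** Returns name to trimmed text for simple attribute elements directly under mbean. */
    static Map<String, String> readAttributes(String xml) {
        Element root;
        try {
            root = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)))
                    .getDocumentElement();
        } catch (Exception e) {
            throw new IllegalArgumentException("Invalid configuration XML", e);
        }
        Map<String, String> result = new LinkedHashMap<>();
        NodeList attrs = root.getElementsByTagName("attribute");  // all descendants, document order
        for (int i = 0; i < attrs.getLength(); i++) {
            Element attr = (Element) attrs.item(i);
            boolean topLevel = "mbean".equals(attr.getParentNode().getNodeName());
            if (topLevel && !hasElementChildren(attr)) {   // skip nested blocks like EvictionConfig
                result.put(attr.getAttribute("name"), attr.getTextContent().trim());
            }
        }
        return result;
    }

    private static boolean hasElementChildren(Element element) {
        NodeList children = element.getChildNodes();
        for (int i = 0; i < children.getLength(); i++) {
            if (children.item(i).getNodeType() == Node.ELEMENT_NODE) {
                return true;
            }
        }
        return false;
    }
}
```

Trimming matters for values such as `TransactionManagerLookupClass`, whose class name is surrounded by whitespace and line breaks in the sample file.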

Here's how you tell the CacheFactory to create and start a cache by finding and parsing a configuration file on the classpath:

```java
CacheFactory factory = DefaultCacheFactory.getInstance();
Cache cache = factory.createCache("cache-configuration.xml");
```

In addition to the XML-based configuration above, the Configuration can be built up programmatically, using the simple property mutators exposed by Configuration and its components. When constructed, the Configuration object is preset with JBoss Cache defaults and can even be used as-is for a quick start.

Following is an example of programmatically creating a Configuration configured to match the one produced by the XML example above, and then using it to create a Cache:

```java
Configuration config = new Configuration();
String tmlc = GenericTransactionManagerLookup.class.getName();
config.setTransactionManagerLookupClass(tmlc);
config.setIsolationLevel(IsolationLevel.READ_COMMITTED);
config.setCacheMode(CacheMode.LOCAL);
config.setLockParentForChildInsertRemove(true);
config.setLockAcquisitionTimeout(15000);

EvictionConfig ec = new EvictionConfig();
ec.setWakeupIntervalSeconds(5);
ec.setDefaultEvictionPolicyClass(LRUPolicy.class.getName());

EvictionRegionConfig erc = new EvictionRegionConfig();
erc.setRegionName("_default_");

LRUConfiguration lru = new LRUConfiguration();
lru.setMaxNodes(5000);
lru.setTimeToLiveSeconds(1000);
erc.setEvictionPolicyConfig(lru);

List<EvictionRegionConfig> ercs = new ArrayList<EvictionRegionConfig>();
ercs.add(erc);
// pass the list of region configs, not the single region config
ec.setEvictionRegionConfigs(ercs);
config.setEvictionConfig(ec);

CacheFactory factory = DefaultCacheFactory.getInstance();
Cache cache = factory.createCache(config);
```

Even the above fairly simple configuration is pretty tedious programming; hence the preferred use of XML-based configuration. However, if your application requires it, there is no reason not to use XML-based configuration for most of the attributes, and then access the Configuration object to programmatically change a few items from the defaults, add an eviction region, etc.

Note that configuration values may not be changed programmatically when a cache is running, except those annotated as @Dynamic. Dynamic properties are also marked as such in the configuration reference table. Attempting to change a non-dynamic property will result in a ConfigurationException.

The Configuration class and its component parts are all Java Beans that expose all config elements via simple setters and getters. Therefore, any good IoC framework should be able to build up a Configuration from an XML file in the framework's own format. See the section on deployment via the JBoss Microcontainer for an example of this.

A Configuration is composed of a number of subobjects:

Following is a brief overview of the components of a Configuration. See the javadoc and the linked chapters in this book for a more complete explanation of the configurations associated with each component.

- Configuration: top-level object in the hierarchy; exposes the configuration properties listed in the configuration reference section of this book.

- BuddyReplicationConfig: only relevant if buddy replication is used. General buddy replication configuration options. Must include a:

  - BuddyLocatorConfig: implementation-specific configuration object for the BuddyLocator implementation being used. What configuration elements are exposed depends on the needs of the BuddyLocator implementation.

- EvictionConfig: only relevant if eviction is used. General eviction configuration options. Must include at least one:

  - EvictionRegionConfig: one for each eviction region; names the region, etc. Must include a:

    - EvictionPolicyConfig: implementation-specific configuration object for the EvictionPolicy implementation being used. What configuration elements are exposed depends on the needs of the EvictionPolicy implementation.

- CacheLoaderConfig: only relevant if a cache loader is used. General cache loader configuration options. Must include at least one:

  - IndividualCacheLoaderConfig: implementation-specific configuration object for the CacheLoader implementation being used. What configuration elements are exposed depends on the needs of the CacheLoader implementation.

- RuntimeConfig: exposes to cache clients certain information about the cache's runtime environment (e.g. membership in buddy replication groups if buddy replication is used). Also allows direct injection into the cache of needed external services like a JTA TransactionManager or a JGroups ChannelFactory.

Some options can be changed while the cache is running. To do so, programmatically obtain the Configuration object from the running cache and change the values. E.g.,

```java
Configuration liveConfig = cache.getConfiguration();
// change the lock acquisition timeout (in milliseconds) on the running cache
liveConfig.setLockAcquisitionTimeout(2000);
```

A complete listing of which options may be changed dynamically is in the configuration reference section. An org.jboss.cache.config.ConfigurationException will be thrown if you attempt to change a setting that is not dynamic.
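The following sketch shows one way a configuration bean could enforce the dynamic/non-dynamic distinction. It assumes a runtime-retained marker annotation that is checked by reflection before each setter runs. DynamicProp, GuardedConfig and checkMutable are hypothetical names, and IllegalStateException stands in for ConfigurationException. It illustrates the idea only and is not JBoss Cache's actual implementation.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

// Hypothetical marker, modelled on the @Dynamic annotation described above
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.FIELD)
@interface DynamicProp {}

class GuardedConfig {
    private volatile boolean running;                 // set once the "cache" has started
    @DynamicProp private long lockAcquisitionTimeout = 10000;
    private String clusterName = "DefaultCluster";    // not dynamic

    void markRunning() { running = true; }

    // Before running, anything may change; afterwards only @DynamicProp fields may
    private void checkMutable(String fieldName) {
        if (!running) return;
        try {
            boolean dynamic = GuardedConfig.class.getDeclaredField(fieldName)
                    .isAnnotationPresent(DynamicProp.class);
            if (!dynamic) {
                // stand-in for ConfigurationException
                throw new IllegalStateException(fieldName + " cannot be changed on a running cache");
            }
        } catch (NoSuchFieldException e) {
            throw new IllegalArgumentException("Unknown property " + fieldName, e);
        }
    }

    void setLockAcquisitionTimeout(long t) { checkMutable("lockAcquisitionTimeout"); lockAcquisitionTimeout = t; }
    long getLockAcquisitionTimeout() { return lockAcquisitionTimeout; }

    void setClusterName(String name) { checkMutable("clusterName"); clusterName = name; }
    String getClusterName() { return clusterName; }
}
```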

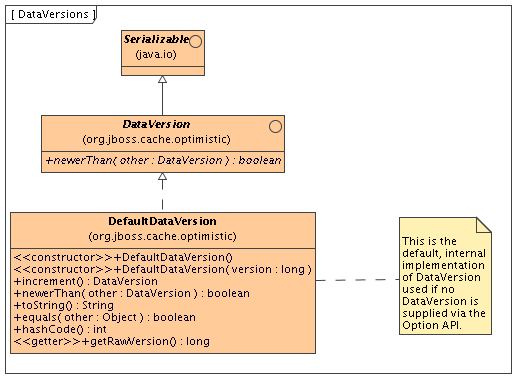

The Option API allows you to override certain behaviours of the cache on a per-invocation basis. This involves creating an instance of org.jboss.cache.config.Option, setting the options you wish to override on the Option object and passing it in the InvocationContext before invoking your method on the cache.

E.g., to override the default node versioning used with optimistic locking:

```java
DataVersion v = new MyCustomDataVersion(); // your own DataVersion implementation
cache.getInvocationContext().getOptionOverrides().setDataVersion(v);
Node ch = cache.getRoot().addChild(Fqn.fromString("/a/b/c"));
```

E.g., to suppress replication of a put call in a REPL_SYNC cache:

```java
Node node = cache.getRoot().getChild(Fqn.fromString("/a/b/c"));
// override set just before the call it should affect
cache.getInvocationContext().getOptionOverrides().setLocalOnly(true);
node.put("localCounter", 2);
```

See the javadocs on the Option class for details on the options available.
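The per-invocation nature of the Option API can be modelled with a thread-local context. In the following sketch, the overrides are cleared once the call they apply to completes, so they cannot leak into later calls or other threads. That reset-after-one-call behaviour is an assumption of this sketch. MiniCache and CallOptions are hypothetical classes, the replicatedPuts counter merely stands in for replication, and the sketch is not thread-safe beyond the overrides themselves.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical per-call overrides, loosely modelled on Option
class CallOptions {
    boolean localOnly;
}

class MiniCache {
    // One CallOptions per thread, created lazily on first access
    private final ThreadLocal<CallOptions> overrides = ThreadLocal.withInitial(CallOptions::new);
    private final Map<String, Object> data = new HashMap<>();
    int replicatedPuts; // counts puts that would have been sent to the cluster

    CallOptions getOptionOverrides() { return overrides.get(); }

    void put(String key, Object value) {
        try {
            data.put(key, value);
            if (!overrides.get().localOnly) {
                replicatedPuts++; // stand-in for replicating the modification
            }
        } finally {
            overrides.remove(); // the overrides applied to this invocation only
        }
    }

    Object get(String key) { return data.get(key); }
}
```

With this design, a caller sets the override immediately before the call it should affect, exactly as in the REPL_SYNC example above.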

When used in a standalone Java program, all that needs to be done is to instantiate the cache using the CacheFactory and a Configuration instance or an XML file, as discussed in the User API and Configuration chapters.

The same techniques can be used when an application running in an application server wishes to programmatically deploy a cache rather than relying on an application server's deployment features. An example of this would be a webapp deploying a cache via a javax.servlet.ServletContextListener.

If, after deploying your cache, you wish to expose a management interface to it in JMX, see the section on Programmatic Registration in JMX.

If JBoss Cache is run in JBoss AS, then the cache can be deployed as an MBean simply by copying a standard cache configuration file to the server's deploy directory. The format of JBoss Cache's standard XML configuration file (as shown in the Configuration Reference) is the same as a JBoss AS MBean deployment descriptor, so the AS's SAR Deployer has no trouble handling it. Also, you don't have to place the configuration file directly in deploy; you can package it along with other services or JEE components in a SAR or EAR.

In AS 5, if you're using a server config based on the standard all config, then that's all you need to do; all required jars will be on the classpath. Otherwise, you will need to ensure jbosscache.jar and jgroups-all.jar are on the classpath. You may need to add other jars if you're using things like JdbmCacheLoader. The simplest way to do this is to copy the jars from the JBoss Cache distribution's lib directory to the server config's lib directory. You could also package the jars with the configuration file in a Service Archive (.sar) file or an EAR.

It is possible to deploy a JBoss Cache 2.0 instance in JBoss AS 4.x (at least in 4.2.0.GA; other AS releases are completely untested). However, the significant API changes between the JBoss Cache 2.x and 1.x releases mean none of the standard AS 4.x clustering services (e.g. HTTP session replication) that rely on JBoss Cache will work with JBoss Cache 2.x. Also, be aware that usage of JBoss Cache 2.x in AS 4.x is not something the JBoss Cache developers are making any significant effort to test, so be sure to test your application well (which of course you're doing anyway).

Note in the example the value of the mbean element's code attribute: org.jboss.cache.jmx.CacheJmxWrapper. This is the class JBoss Cache uses to handle JMX integration; the Cache itself does not expose an MBean interface. See the JBoss Cache MBeans section for more on the CacheJmxWrapper.

Once your cache is deployed, in order to use it with an in-VM client such as a servlet, a JMX proxy can be used to get a reference to the cache:

```java
import javax.management.MBeanServer;
import javax.management.MBeanServerInvocationHandler;
import javax.management.ObjectName;
import org.jboss.cache.Cache;
import org.jboss.cache.Node;
import org.jboss.cache.jmx.CacheJmxWrapperMBean;
import org.jboss.mx.util.MBeanServerLocator;

MBeanServer server = MBeanServerLocator.locateJBoss();
ObjectName on = new ObjectName("jboss.cache:service=Cache");
CacheJmxWrapperMBean cacheWrapper =
   (CacheJmxWrapperMBean) MBeanServerInvocationHandler.newProxyInstance(server, on,
      CacheJmxWrapperMBean.class, false);
Cache cache = cacheWrapper.getCache();
Node root = cache.getRoot(); // etc etc
```

The MBeanServerLocator class is a helper to find the (only) JBoss MBean server inside the current JVM. The newProxyInstance method of the javax.management.MBeanServerInvocationHandler class creates a dynamic proxy implementing the given interface and uses JMX to dynamically dispatch methods invoked against the generated interface to the MBean. The name used to look up the MBean is the same as defined in the cache's configuration file.

Once the proxy to the CacheJmxWrapper is obtained, the getCache() method will return a reference to the Cache itself.
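The proxy technique above is plain JMX, so it can be tried without JBoss AS. The following JDK-only sketch registers an object in the platform MBean server, which stands in here for MBeanServerLocator.locateJBoss(). It then talks to that object through MBeanServerInvocationHandler.newProxyInstance. The CounterMBean interface, the Counter class and the ObjectName used in the test are hypothetical.

```java
import java.lang.management.ManagementFactory;
import javax.management.MBeanServer;
import javax.management.MBeanServerInvocationHandler;
import javax.management.ObjectName;
import javax.management.StandardMBean;

class JmxProxyDemo {
    // Management interface; JMX requires it to be public
    public interface CounterMBean {
        int getCount();
        void increment();
    }

    // Plain implementation that knows nothing about JMX
    static class Counter implements CounterMBean {
        private int count;
        public int getCount() { return count; }
        public void increment() { count++; }
    }

    static CounterMBean registerAndProxy(String name) throws Exception {
        MBeanServer server = ManagementFactory.getPlatformMBeanServer();
        ObjectName on = new ObjectName(name);
        // StandardMBean exposes the object through an explicitly named interface
        server.registerMBean(new StandardMBean(new Counter(), CounterMBean.class), on);
        // Every call made on the returned proxy is dispatched through the MBean server
        return MBeanServerInvocationHandler.newProxyInstance(server, on, CounterMBean.class, false);
    }
}
```

On the proxy, getCount() becomes a read of the Count attribute and increment() becomes an operation invocation. Both go through the MBean server rather than calling the Counter directly.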

Beginning with AS 5, JBoss AS also supports deployment of POJO services via deployment of a file whose name ends with -beans.xml. A POJO service is one whose implementation is via a "Plain Old Java Object", meaning a simple JavaBean that isn't required to implement any special interfaces or extend any particular superclass. A Cache is a POJO service, and all the components in a Configuration are also POJOs, so deploying a cache in this way is a natural step.

Deployment of the cache is done using the JBoss Microcontainer that forms the core of JBoss AS. JBoss Microcontainer is a sophisticated IoC framework (similar to Spring). A -beans.xml file is basically a descriptor that tells the IoC framework how to assemble the various beans that make up a POJO service.

The rules for how to deploy the file, how to package it, how to ensure the required jars are on the classpath, etc. are the same as for a JMX-based deployment.

Following is an example -beans.xml file. If you look in the server/all/deploy directory of an AS 5 installation, you can find several more examples.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<deployment xmlns="urn:jboss:bean-deployer:2.0">

   <!-- First we create a Configuration object for the cache -->
   <bean name="ExampleCacheConfig"
         class="org.jboss.cache.config.Configuration">

      <!-- Externally injected services -->
      <property name="runtimeConfig">
         <bean name="ExampleCacheRuntimeConfig" class="org.jboss.cache.config.RuntimeConfig">
            <property name="transactionManager">
               <inject bean="jboss:service=TransactionManager"
                       property="TransactionManager"/>
            </property>
            <property name="muxChannelFactory"><inject bean="JChannelFactory"/></property>
         </bean>
      </property>

      <property name="multiplexerStack">udp</property>

      <property name="clusterName">Example-EntityCache</property>

      <!--
         Node locking level : SERIALIZABLE
                              REPEATABLE_READ (default)
                              READ_COMMITTED
                              READ_UNCOMMITTED
                              NONE
      -->
      <property name="isolationLevel">REPEATABLE_READ</property>

      <!-- Valid modes are LOCAL
                           REPL_ASYNC
                           REPL_SYNC
      -->
      <property name="cacheMode">REPL_SYNC</property>

      <!-- The max amount of time (in milliseconds) we wait until the
           initial state (i.e. the contents of the cache) is retrieved from
           existing members in a clustered environment
      -->
      <property name="initialStateRetrievalTimeout">15000</property>

      <!-- Number of milliseconds to wait until all responses for a
           synchronous call have been received.
      -->
      <property name="syncReplTimeout">20000</property>

      <!-- Max number of milliseconds to wait for a lock acquisition -->
      <property name="lockAcquisitionTimeout">15000</property>

      <property name="exposeManagementStatistics">true</property>

      <!-- Must be true if any entity deployment uses a scoped classloader -->
      <property name="useRegionBasedMarshalling">true</property>
      <!-- Must match the value of "useRegionBasedMarshalling" -->
      <property name="inactiveOnStartup">true</property>

      <!-- Specific eviction policy configurations. This is LRU -->
      <property name="evictionConfig">
         <bean name="ExampleEvictionConfig"
               class="org.jboss.cache.config.EvictionConfig">
            <property name="defaultEvictionPolicyClass">
               org.jboss.cache.eviction.LRUPolicy
            </property>
            <property name="wakeupIntervalSeconds">5</property>
            <property name="evictionRegionConfigs">
               <list>
                  <bean name="ExampleDefaultEvictionRegionConfig"
                        class="org.jboss.cache.config.EvictionRegionConfig">
                     <property name="regionName">/_default_</property>
                     <property name="evictionPolicyConfig">
                        <bean name="ExampleDefaultLRUConfig"
                              class="org.jboss.cache.eviction.LRUConfiguration">
                           <property name="maxNodes">5000</property>
                           <property name="timeToLiveSeconds">1000</property>
                        </bean>
                     </property>
                  </bean>
               </list>
            </property>
         </bean>
      </property>

   </bean>

   <!-- Factory to build the Cache. -->
   <bean name="DefaultCacheFactory" class="org.jboss.cache.DefaultCacheFactory">
      <constructor factoryClass="org.jboss.cache.DefaultCacheFactory"
                   factoryMethod="getInstance"/>
   </bean>

   <!-- The cache itself -->
   <bean name="ExampleCache" class="org.jboss.cache.CacheImpl">
      <constructor factoryMethod="createCache">
         <factory bean="DefaultCacheFactory"/>
         <parameter><inject bean="ExampleCacheConfig"/></parameter>
         <!-- false: build the cache but don't start it -->
         <parameter>false</parameter>
      </constructor>
   </bean>

</deployment>
```

See the JBoss Microcontainer documentation [2] for details on the above syntax. Basically, each bean element represents an object; most of them are used to create a Configuration and its constituent parts.

An interesting thing to note in the above example is the use of the RuntimeConfig object. External resources like a TransactionManager and a JGroups ChannelFactory that are visible to the microcontainer are dependency injected into the RuntimeConfig. The assumption here is that the referenced beans have already been defined in some other deployment descriptor in the AS.

With the 1.x JBoss Cache releases, a proxy to the cache could be bound into JBoss AS's JNDI tree using the AS's JRMPProxyFactory service. With JBoss Cache 2.x, this no longer works. An alternative way of doing a similar thing with a POJO (i.e. non-JMX-based) service like a Cache is under development by the JBoss AS team [3]. That feature is not available as of the time of this writing, although it will be completed before AS 5.0.0.GA is released. We will add a wiki page describing how to use it once it becomes available.

JBoss Cache includes JMX MBeans to expose cache functionality and provide statistics that can be used to analyze cache operations. JBoss Cache can also broadcast cache events as MBean notifications for handling via JMX monitoring tools.

JBoss Cache provides an MBean that can be registered with your environment's JMX server to allow access to the cache instance via JMX. This MBean is the org.jboss.cache.jmx.CacheJmxWrapper. It is a StandardMBean, so its MBean interface is org.jboss.cache.jmx.CacheJmxWrapperMBean. This MBean can be used to:

- Get a reference to the underlying Cache.

- Invoke create/start/stop/destroy lifecycle operations on the underlying Cache.

- Inspect various details about the cache's current state (number of nodes, lock information, etc.)

- See numerous details about the cache's configuration, and change those configuration items that can be changed when the cache has already been started.

See the CacheJmxWrapperMBean javadoc for more details.

It is important to note a significant architectural difference between JBoss Cache 1.x and 2.x. In 1.x, the old TreeCache class was itself an MBean, and essentially exposed the cache's entire API via JMX. In 2.x, JMX has been returned to its fundamental role as a management layer. The Cache object itself is completely unaware of JMX; instead, JMX functionality is added through a wrapper class designed for that purpose. Furthermore, the interface exposed through JMX has been limited to management functions; the general Cache API is no longer exposed through JMX. For example, it is no longer possible to invoke a cache put or get via the JMX interface.

If a CacheJmxWrapper is registered, JBoss Cache also provides MBeans for each interceptor configured in the cache's interceptor stack. These MBeans are used to capture and expose statistics related to cache operations. They are hierarchically associated with the CacheJmxWrapper MBean and have service names that reflect this relationship. For example, a replication interceptor MBean for the jboss.cache:service=TomcatClusteringCache instance will be accessible through the service named jboss.cache:service=TomcatClusteringCache,cache-interceptor=ReplicationInterceptor.

The best way to ensure the CacheJmxWrapper is registered in JMX depends on how you are deploying your cache.

When registering programmatically, the simplest way is to create your Cache and pass it to the CacheJmxWrapper constructor:

```java
CacheFactory factory = DefaultCacheFactory.getInstance();
// Build but don't start the cache
// (although it would work OK if we started it)
Cache cache = factory.createCache("cache-configuration.xml", false);

CacheJmxWrapperMBean wrapper = new CacheJmxWrapper(cache);
MBeanServer server = getMBeanServer(); // however you do it
ObjectName on = new ObjectName("jboss.cache:service=TreeCache");
server.registerMBean(wrapper, on);

// Invoking lifecycle methods on the wrapper results
// in a call through to the cache
wrapper.create();
wrapper.start();

// ... use the cache

// ... on application shutdown

// Invoking lifecycle methods on the wrapper results
// in a call through to the cache
wrapper.stop();
wrapper.destroy();
```

Alternatively, build a Configuration object and pass it to the CacheJmxWrapper. The wrapper will construct the Cache:

```java
Configuration config = buildConfiguration(); // whatever it does

CacheJmxWrapperMBean wrapper = new CacheJmxWrapper(config);
MBeanServer server = getMBeanServer(); // however you do it
ObjectName on = new ObjectName("jboss.cache:service=TreeCache");
server.registerMBean(wrapper, on);

// Call to wrapper.create() will build the Cache if one wasn't injected
wrapper.create();
wrapper.start();

// Now that it's built, created and started, get the cache from the wrapper
Cache cache = wrapper.getCache();

// ... use the cache

// ... on application shutdown
wrapper.stop();
wrapper.destroy();
```

When you deploy your cache in JBoss AS using a -service.xml file, a CacheJmxWrapper is automatically registered. There is no need to do anything further. The CacheJmxWrapper is accessible from an MBean server through the service name specified in the cache configuration file's mbean element.

When deploying via the JBoss Microcontainer, note that CacheJmxWrapper is a POJO, so the microcontainer has no problem creating one. The trick is getting it to register your bean in JMX. This can be done by specifying the org.jboss.aop.microcontainer.aspects.jmx.JMX annotation on the CacheJmxWrapper bean:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<deployment xmlns="urn:jboss:bean-deployer:2.0">

   <!-- First we create a Configuration object for the cache -->
   <bean name="ExampleCacheConfig"
         class="org.jboss.cache.config.Configuration">
      <!-- ... build up the Configuration -->
   </bean>

   <!-- Factory to build the Cache. -->
   <bean name="DefaultCacheFactory" class="org.jboss.cache.DefaultCacheFactory">
      <constructor factoryClass="org.jboss.cache.DefaultCacheFactory"
                   factoryMethod="getInstance"/>
   </bean>

   <!-- The cache itself -->
   <bean name="ExampleCache" class="org.jboss.cache.CacheImpl">
      <constructor factoryMethod="createCache">
         <factory bean="DefaultCacheFactory"/>
         <parameter><inject bean="ExampleCacheConfig"/></parameter>
         <parameter>false</parameter>
      </constructor>
   </bean>

   <!-- JMX Management -->
   <bean name="ExampleCacheJmxWrapper" class="org.jboss.cache.jmx.CacheJmxWrapper">
      <annotation>@org.jboss.aop.microcontainer.aspects.jmx.JMX(name="jboss.cache:service=ExampleTreeCache",
                  exposedInterface=org.jboss.cache.jmx.CacheJmxWrapperMBean.class,
                  registerDirectly=true)</annotation>
      <constructor>
         <parameter><inject bean="ExampleCache"/></parameter>
      </constructor>
   </bean>

</deployment>
```

As discussed in the Programmatic Registration section, CacheJmxWrapper can do the work of building, creating and starting the Cache if it is provided with a Configuration. With the microcontainer, this is the preferred approach, as it saves the boilerplate XML needed to create the CacheFactory:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<deployment xmlns="urn:jboss:bean-deployer:2.0">

   <!-- First we create a Configuration object for the cache -->
   <bean name="ExampleCacheConfig"
         class="org.jboss.cache.config.Configuration">
      <!-- ... build up the Configuration -->
   </bean>

   <bean name="ExampleCache" class="org.jboss.cache.jmx.CacheJmxWrapper">
      <annotation>@org.jboss.aop.microcontainer.aspects.jmx.JMX(name="jboss.cache:service=ExampleTreeCache",
                  exposedInterface=org.jboss.cache.jmx.CacheJmxWrapperMBean.class,
                  registerDirectly=true)</annotation>
      <constructor>
         <parameter><inject bean="ExampleCacheConfig"/></parameter>
      </constructor>
   </bean>

</deployment>
```

JBoss Cache captures statistics in its interceptors and exposes the statistics through interceptor MBeans. Gathering of statistics is enabled by default; this can be disabled for a specific cache instance through the ExposeManagementStatistics configuration attribute. Note that the majority of the statistics are provided by the CacheMgmtInterceptor, so this MBean is the most significant in this regard. If you want to disable all statistics for performance reasons, set ExposeManagementStatistics to false, as this will prevent the CacheMgmtInterceptor from being included in the cache's interceptor stack when the cache is started.

If a CacheJmxWrapper is registered with JMX, the wrapper also ensures that an MBean is registered in JMX for each interceptor that exposes statistics [4]. Management tools can then access those MBeans to examine the statistics. See the section in the JMX Reference chapter pertaining to the statistics that are made available via JMX.

The name under which the interceptor MBeans will be registered is derived by taking the ObjectName under which the CacheJmxWrapper is registered and adding a cache-interceptor attribute key whose value is the non-qualified name of the interceptor class. So, for example, if the CacheJmxWrapper were registered under jboss.cache:service=TreeCache, the name of the CacheMgmtInterceptor MBean would be jboss.cache:service=TreeCache,cache-interceptor=CacheMgmtInterceptor.
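The naming rule can be expressed with the standard javax.management.ObjectName API. The helper below is a hypothetical sketch and not a JBoss Cache class. It appends the cache-interceptor key to the wrapper's name and keeps the wrapper's own key properties in their original order.

```java
import javax.management.MalformedObjectNameException;
import javax.management.ObjectName;

class InterceptorNames {
    // wrapper name + ",cache-interceptor=<unqualified interceptor class name>"
    static ObjectName interceptorName(ObjectName wrapperName, String interceptorClassName)
            throws MalformedObjectNameException {
        String simpleName = interceptorClassName.substring(interceptorClassName.lastIndexOf('.') + 1);
        return new ObjectName(wrapperName.getDomain() + ":"
                + wrapperName.getKeyPropertyListString()
                + ",cache-interceptor=" + simpleName);
    }
}
```

ObjectName equality is based on the canonical form, so the derived name matches a name written out by hand in a different key order.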

Each interceptor's MBean exposes a StatisticsEnabled attribute that can be used to disable maintenance of statistics for that interceptor. In addition, each interceptor MBean provides the following common operations and attributes.

- dumpStatistics - returns a Map containing the interceptor's attributes and values.

- resetStatistics - resets all statistics maintained by the interceptor.

- setStatisticsEnabled(boolean) - allows statistics to be disabled for a specific interceptor.

JBoss Cache users can register a listener to receive cache events described earlier in the User API chapter. Users can alternatively utilize the cache's management information infrastructure to receive these events via JMX notifications. Cache events are accessible as notifications by registering a NotificationListener for the CacheJmxWrapper.

See the section in the JMX Reference chapter pertaining to JMX notifications for a list of notifications that can be received through the CacheJmxWrapper.

The following is an example of how to programmatically receive cache notifications when running in a JBoss AS environment. In this example, the client uses a filter to specify which events are of interest.

```java
MyListener listener = new MyListener();
NotificationFilterSupport filter = null;

// get reference to MBean server
Context ic = new InitialContext();
MBeanServerConnection server = (MBeanServerConnection) ic.lookup("jmx/invoker/RMIAdaptor");

// get reference to the CacheJmxWrapper MBean, which emits the cache notifications
String cache_service = "jboss.cache:service=TomcatClusteringCache";
ObjectName mgmt_name = new ObjectName(cache_service);

// configure a filter to only receive node created and removed events
filter = new NotificationFilterSupport();
filter.disableAllTypes();
filter.enableType(CacheNotificationBroadcaster.NOTIF_NODE_CREATED);
filter.enableType(CacheNotificationBroadcaster.NOTIF_NODE_REMOVED);

// register the listener with a filter
// leave the filter null to receive all cache events
server.addNotificationListener(mgmt_name, listener, filter, null);

// ...

// on completion of processing, unregister the listener
server.removeNotificationListener(mgmt_name, listener, filter, null);
```

The following is the simple notification listener implementation used in the previous example.

```java
private class MyListener implements NotificationListener, Serializable
{
   public void handleNotification(Notification notification, Object handback)
   {
      String message = notification.getMessage();
      String type = notification.getType();
      Object userData = notification.getUserData();

      System.out.println(type + ": " + message);

      if (userData == null)
      {
         System.out.println("notification data is null");
      }
      else if (userData instanceof String)
      {
         System.out.println("notification data: " + (String) userData);
      }
      else if (userData instanceof Object[])
      {
         Object[] ud = (Object[]) userData;
         for (Object data : ud)
         {
            System.out.println("notification data: " + data.toString());
         }
      }
      else
      {
         System.out.println("notification data class: " + userData.getClass().getName());
      }
   }
}
```
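The filtering in the earlier example can be tried without a running cache. The following JDK-only sketch sends three notifications through a NotificationBroadcasterSupport and lets a NotificationFilterSupport pass only two of them. The notification type strings are hypothetical placeholders; the real types are listed in the JMX Reference chapter. Note that enableType matches by prefix, so a type string enables every type that starts with it.

```java
import java.util.ArrayList;
import java.util.List;
import javax.management.Notification;
import javax.management.NotificationBroadcasterSupport;
import javax.management.NotificationFilterSupport;

class FilterDemo {
    // Hypothetical type strings standing in for the real cache notification types
    static final String NODE_CREATED = "example.node.created";
    static final String NODE_REMOVED = "example.node.removed";
    static final String NODE_MODIFIED = "example.node.modified";

    static List<String> deliveredTypes() {
        // The no-arg constructor delivers notifications in the sending thread
        NotificationBroadcasterSupport broadcaster = new NotificationBroadcasterSupport();
        List<String> received = new ArrayList<>();

        NotificationFilterSupport filter = new NotificationFilterSupport();
        filter.disableAllTypes();
        filter.enableType(NODE_CREATED);
        filter.enableType(NODE_REMOVED);

        broadcaster.addNotificationListener((n, handback) -> received.add(n.getType()), filter, null);

        long sequence = 0;
        for (String type : new String[] {NODE_CREATED, NODE_MODIFIED, NODE_REMOVED}) {
            broadcaster.sendNotification(new Notification(type, "demo-source", ++sequence, "/a/b/c"));
        }
        return received; // the modified event was filtered out
    }
}
```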

Note that the JBoss Cache management implementation only listens to cache events after a client registers to receive MBean notifications. As soon as no clients are registered for notifications, the MBean will remove itself as a cache listener.
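The register-on-demand behaviour described above follows a common pattern: subscribe to the underlying event source only while at least one downstream client is listening. The following sketch models that pattern with hypothetical EventSource and LazyBridge classes. It illustrates the pattern and is not the CacheJmxWrapper code.

```java
import java.util.Set;
import java.util.concurrent.CopyOnWriteArraySet;
import java.util.function.Consumer;

// Hypothetical event source standing in for the cache's own listener API
class EventSource {
    final Set<Consumer<String>> listeners = new CopyOnWriteArraySet<>();

    void fire(String event) {
        for (Consumer<String> l : listeners) {
            l.accept(event);
        }
    }
}

// Attaches to the source when the first client registers, detaches when the last leaves
class LazyBridge {
    private final EventSource source;
    private final Set<Consumer<String>> clients = new CopyOnWriteArraySet<>();
    private final Consumer<String> forwarder = e -> clients.forEach(c -> c.accept(e));

    LazyBridge(EventSource source) { this.source = source; }

    synchronized void addClient(Consumer<String> client) {
        if (clients.add(client) && clients.size() == 1) {
            source.listeners.add(forwarder);    // first client: start listening
        }
    }

    synchronized void removeClient(Consumer<String> client) {
        if (clients.remove(client) && clients.isEmpty()) {
            source.listeners.remove(forwarder); // last client gone: stop listening
        }
    }

    boolean attached() { return source.listeners.contains(forwarder); }
}
```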

JBoss Cache MBeans are easily accessed when running cache instances in an application server that provides an MBean server and a management interface to it, such as the JBoss JMX Console. Refer to your server documentation for instructions on how to access MBeans running in a server's MBean container.

JBoss Cache MBeans are also accessible when running in a non-server environment, provided the JVM is JDK 5.0 or later. When running a standalone cache in a JDK 5.0 environment, you can access the cache's MBeans as follows.

- Set the system property -Dcom.sun.management.jmxremote when starting the JVM where the cache will run.

- Once the JVM is running, start the JDK 5.0 jconsole utility, located in your JDK's /bin directory.

- When the utility loads, you will be able to select your running JVM and connect to it. The JBoss Cache MBeans will be available on the MBeans panel.

Note that the jconsole utility will automatically register as a listener for cache notifications when connected to a JVM running JBoss Cache instances.

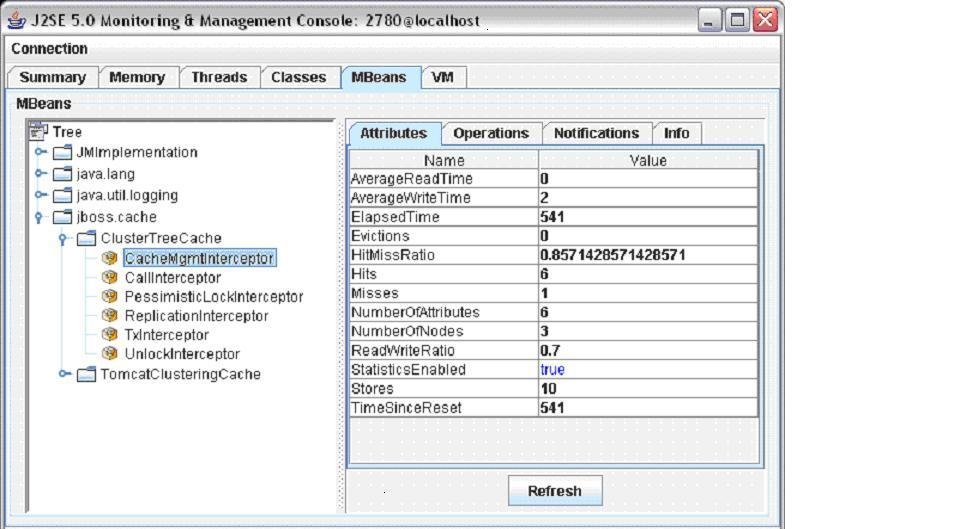

The following figure shows cache interceptor MBeans in jconsole . Cache statistics are displayed for the CacheMgmtInterceptor :

[2] http://labs.jboss.com/jbossmc/docs

[3] http://jira.jboss.com/jira/browse/JBAS-4456

[4] Note that if the CacheJmxWrapper is not registered in JMX, the interceptor MBeans will not be registered either. The JBoss Cache 1.4 releases included code that would try to "discover" an MBeanServer and automatically register the interceptor MBeans with it. For JBoss Cache 2.x we decided that this sort of "discovery" of the JMX environment was beyond the proper scope of a caching library, so we removed this functionality.

Within a major version, releases of JBoss Cache are meant to be compatible and interoperable. Compatible means that it should be possible to upgrade an application from one version to another by simply replacing the jars. Interoperable means that if two different versions of JBoss Cache are used in the same cluster, they should be able to exchange replication and state transfer messages. Note, however, that interoperability requires use of the same JGroups version on all nodes in the cluster. In most cases, the version of JGroups used by a version of JBoss Cache can be upgraded.

Because these guarantees only apply within a major version, JBoss Cache 2.x.x is not API or binary compatible with prior 1.x.x versions. However, JBoss Cache 2.1.x will be API and binary compatible with 2.0.x.

A configuration attribute, ReplicationVersion, is available and is used to control the wire format of inter-cache communications. The wire format can be wound back from newer, more efficient protocols to "compatible" versions when talking to older releases. This mechanism allows us to improve JBoss Cache by using more efficient wire formats while still providing a means to preserve interoperability.

A compatibility matrix is maintained on the JBoss Cache website, which contains information on different versions of JBoss Cache, JGroups and JBoss AS.

This section digs deeper into the JBoss Cache architecture, and is meant for developers who wish to extend or enhance JBoss Cache or write plugins, or who are simply looking for detailed knowledge of how things work under the hood.

A Cache consists of a collection of Node instances, organised in a tree structure. Each Node contains a Map which holds the data objects to be cached. It is important to note that the structure is a mathematical tree, and not a graph; each Node has one and only one parent, and the root node is denoted by the constant fully qualified name, Fqn.ROOT.

The reason for organising nodes as such is to improve concurrent access to data and make replication and persistence more fine-grained.
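As a rough illustration of the tree structure, the sketch below models nodes with exactly one parent, named children and a data map. It resolves slash-separated paths in the spirit of Fqn.fromString("/a/b/c"). TreeNode and its methods are hypothetical. The real Node and Fqn classes do considerably more, for example per-node locking.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical minimal tree node: one parent, named children, and a data map
class TreeNode {
    final String name;
    final TreeNode parent;
    final Map<String, TreeNode> children = new HashMap<>();
    final Map<Object, Object> data = new HashMap<>();

    TreeNode(String name, TreeNode parent) {
        this.name = name;
        this.parent = parent;
    }

    // "/a/b/c" -> [a, b, c]; "/" -> [] (the root itself)
    static List<String> parse(String path) {
        String trimmed = path.replaceAll("^/+|/+$", "");
        return trimmed.isEmpty() ? Collections.<String>emptyList() : Arrays.asList(trimmed.split("/+"));
    }

    // Walks the path, creating any missing intermediate nodes along the way
    TreeNode addChild(String path) {
        TreeNode current = this;
        for (String part : parse(path)) {
            final TreeNode p = current;
            current = current.children.computeIfAbsent(part, n -> new TreeNode(n, p));
        }
        return current;
    }

    // Returns null if any element of the path does not exist
    TreeNode getChild(String path) {
        TreeNode current = this;
        for (String part : parse(path)) {
            current = current.children.get(part);
            if (current == null) {
                return null;
            }
        }
        return current;
    }
}
```

Because each node owns its own data map and child map, an operation on /a/b/c only touches the nodes along that path. This is the property that allows finer-grained locking and replication.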

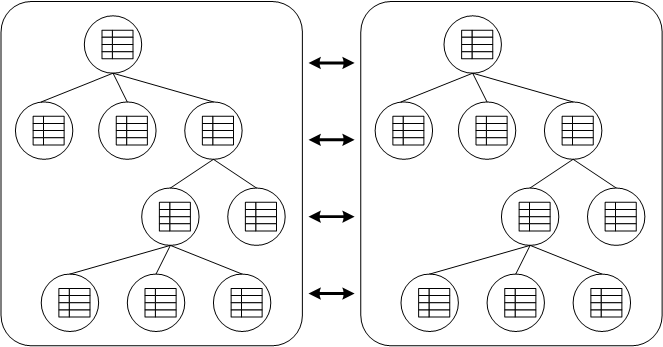

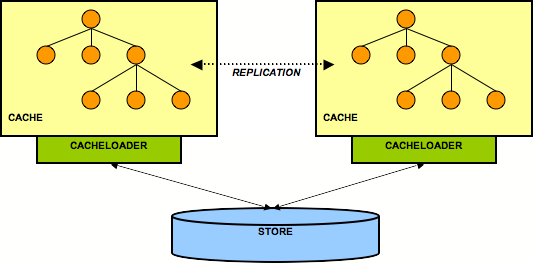

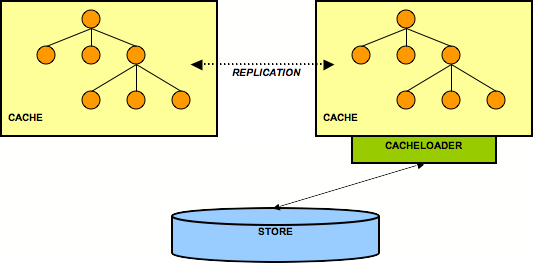

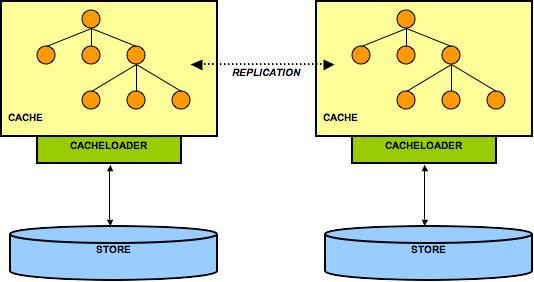

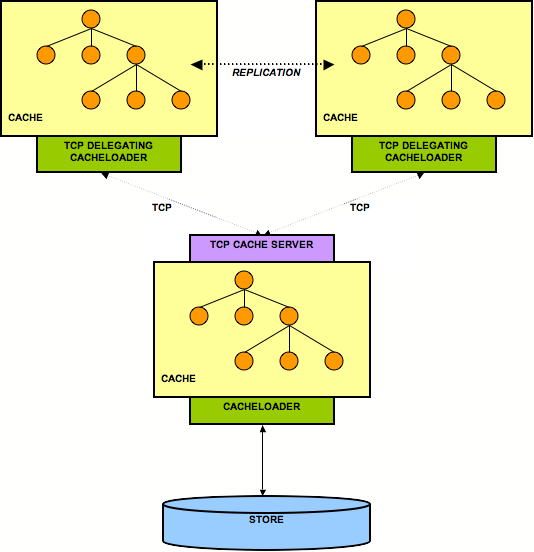

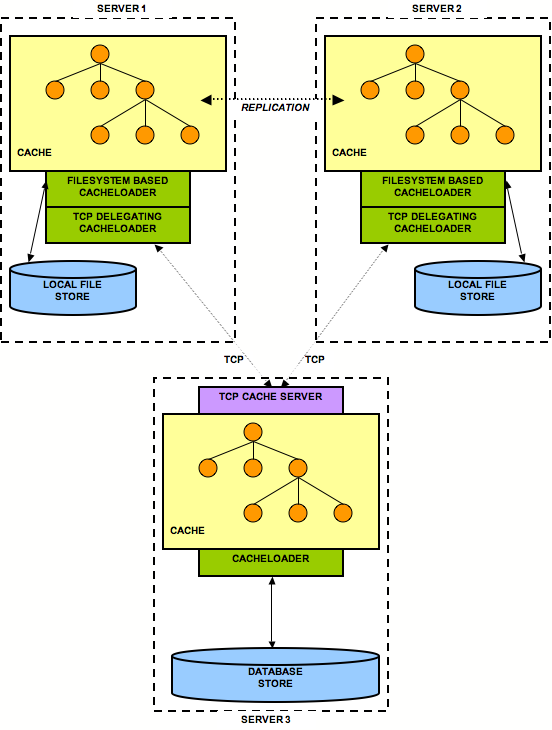

In the diagram above, each box represents a JVM. You see 2 caches in separate JVMs, replicating data to each other. These VMs can be located on the same physical machine, or on 2 different machines connected by a network link. The underlying group communication between networked nodes is done using JGroups.

Any modifications (see the API chapter) in one cache instance will be replicated to the other cache. Naturally, you can have more than 2 caches in a cluster. Depending on the transactional settings, this replication will occur either after each modification or at the end of a transaction, at commit time. When a new cache is created, it can optionally acquire the contents from one of the existing caches on startup.

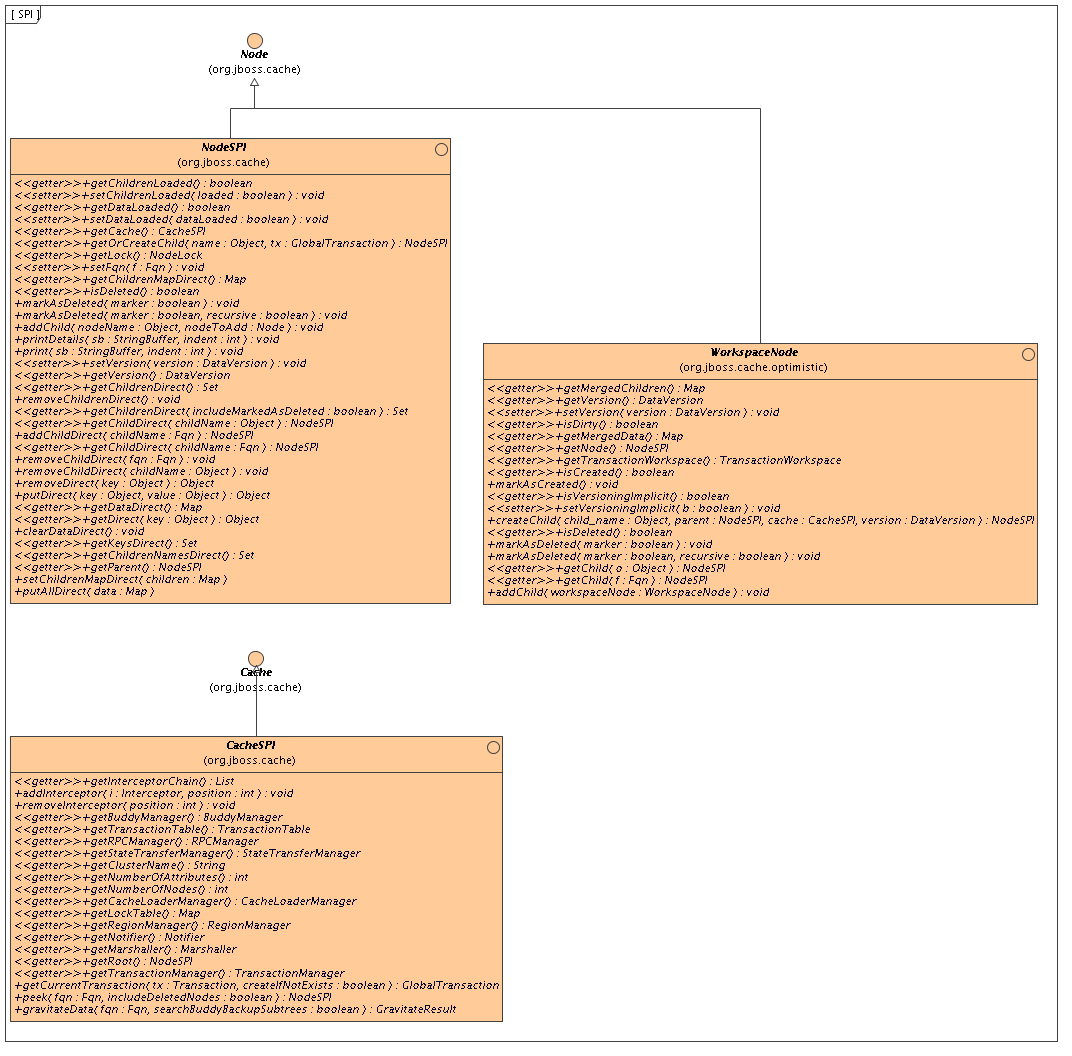

In addition to the Cache and Node interfaces, JBoss Cache exposes more powerful CacheSPI and NodeSPI interfaces, which offer more control over the internals of JBoss Cache. These interfaces are not intended for general use, but are designed for people who wish to extend and enhance JBoss Cache, or write custom Interceptor or CacheLoader instances.

A CacheSPI cannot be created directly; instead, it is injected into Interceptor and CacheLoader implementations by the setCache(CacheSPI cache) methods on these interfaces. CacheSPI extends Cache, so all the functionality of the basic API is made available.

Similarly, a NodeSPI cannot be created directly. Instead, one is obtained by performing operations on a CacheSPI, obtained as above. For example, Cache.getRoot() : Node is overridden as CacheSPI.getRoot() : NodeSPI.

It is important to note that directly casting a Cache or Node to its SPI counterpart is not recommended and is bad practice, since the inheritance of interfaces is not a contract that is guaranteed to be upheld moving forward. The exposed public APIs, on the other hand, are guaranteed to be upheld.

Since the cache is essentially a collection of nodes, aspects such as clustering, persistence, eviction, etc. need to be applied to these nodes when operations are invoked on the cache as a whole or on individual nodes. To achieve this in a clean, modular and extensible manner, an interceptor chain is used. The chain is built up of a series of interceptors, each one adding an aspect or particular functionality. The chain is built when the cache is created, based on the configuration used.

It is important to note that the NodeSPI offers some methods (such as the xxxDirect() method family) that operate on a node directly without passing through the interceptor stack. Plugin authors should note that such methods bypass aspects of the cache that may need to be applied, such as locking, replication, etc. Basically, don't use such methods unless you really know what you're doing!

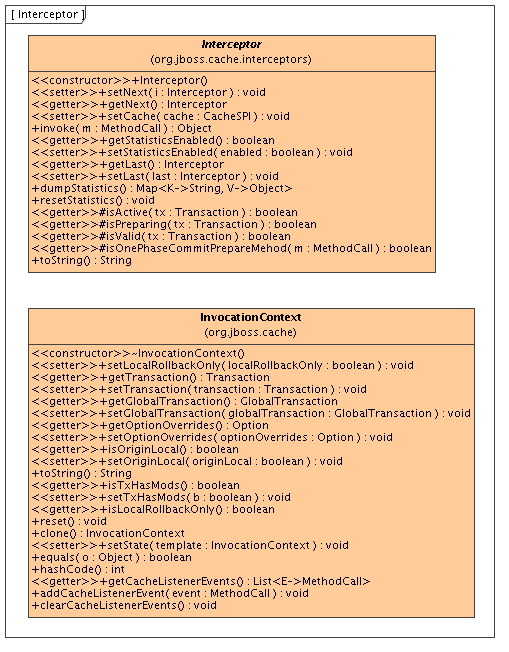

An Interceptor is an abstract class; a series of Interceptor instances makes up an interceptor chain. It exposes an invoke() method, which must be overridden by implementing classes to add behaviour to a call before passing the call down the chain by calling super.invoke().

JBoss Cache ships with several interceptors, representing different configuration options, some of which are:

- TxInterceptor - looks for ongoing transactions and registers with transaction managers to participate in synchronization events

- ReplicationInterceptor - replicates state across a cluster using a JGroups channel

- CacheLoaderInterceptor - loads data from a persistent store if the data requested is not available in memory

The interceptor chain configured for your cache instance can be obtained and inspected by calling CacheSPI.getInterceptorChain(), which returns an ordered List of interceptors.

Custom interceptors to add specific aspects or features can be written by extending Interceptor and overriding invoke(). The custom interceptor will need to be added to the interceptor chain by using the CacheSPI.addInterceptor() method.

Adding custom interceptors via XML is not supported at this time.
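The chain-of-responsibility idea behind the interceptor stack can be sketched in a few lines. ChainLink, LoggingLink, TerminalLink, Chains and Call below are hypothetical stand-ins for Interceptor and MethodCall. The sketch shows only how each link adds its behaviour and then delegates to the next link through super.invoke().

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for MethodCall: a method name plus its arguments
class Call {
    final String method;
    final Object[] args;

    Call(String method, Object... args) {
        this.method = method;
        this.args = args;
    }
}

// Base link: by default, simply passes the call to the next link
abstract class ChainLink {
    private ChainLink next;

    void setNext(ChainLink next) { this.next = next; }

    Object invoke(Call call) {
        return next == null ? null : next.invoke(call);
    }
}

// Adds one aspect (here, recording the call) and then continues down the chain
class LoggingLink extends ChainLink {
    final List<String> log = new ArrayList<>();

    @Override
    Object invoke(Call call) {
        log.add("before " + call.method);
        return super.invoke(call);
    }
}

// Last link: performs the operation (here it just reports what it executed)
class TerminalLink extends ChainLink {
    @Override
    Object invoke(Call call) {
        return "executed " + call.method;
    }
}

class Chains {
    // Wires the links in the given order and returns the head of the chain
    static ChainLink build(ChainLink... links) {
        for (int i = 0; i < links.length - 1; i++) {
            links[i].setNext(links[i + 1]);
        }
        return links[0];
    }
}
```

Because every aspect lives in its own link, an aspect that is not configured can simply be left out of the chain. This corresponds to how disabling ExposeManagementStatistics keeps the CacheMgmtInterceptor out of the stack.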

org.jboss.cache.marshall.MethodCall is a class that encapsulates a java.lang.reflect.Method and an Object[] representing the method's arguments. It is an extension of the org.jgroups.blocks.MethodCall class that adds a mechanism for identifying known methods using magic numbers and method ids, which makes marshalling and unmarshalling more efficient.

This is central to the Interceptor architecture, and is the only parameter passed in to Interceptor.invoke().

InvocationContext holds intermediate state for the duration of a single invocation, and is set up and destroyed by the InvocationContextInterceptor, which sits at the start of the chain.

InvocationContext, as its name implies, holds contextual information associated with a single cache method invocation. Contextual information includes the associated javax.transaction.Transaction or org.jboss.cache.transaction.GlobalTransaction, the method invocation origin (InvocationContext.isOriginLocal()) as well as Option overrides.

The InvocationContext can be obtained by calling Cache.getInvocationContext().

Some aspects and functionality are shared by more than a single interceptor. Some of these have been encapsulated into managers, for use by various interceptors, and are made available by the CacheSPI interface.

RPCManager: this class is responsible for calls made via the JGroups channel for all RPC calls to remote caches, and encapsulates the JGroups channel used.

BuddyManager: this class manages buddy groups and invokes group organisation remote calls to organise a cluster of caches into smaller sub-groups.

Early versions of JBoss Cache simply wrote cached data to the network by writing to an ObjectOutputStream during replication. Over various releases in the JBoss Cache 1.x.x series this approach was gradually deprecated in favour of a more mature marshalling framework. In the JBoss Cache 2.x.x series, this is the only officially supported and recommended mechanism for writing objects to datastreams.

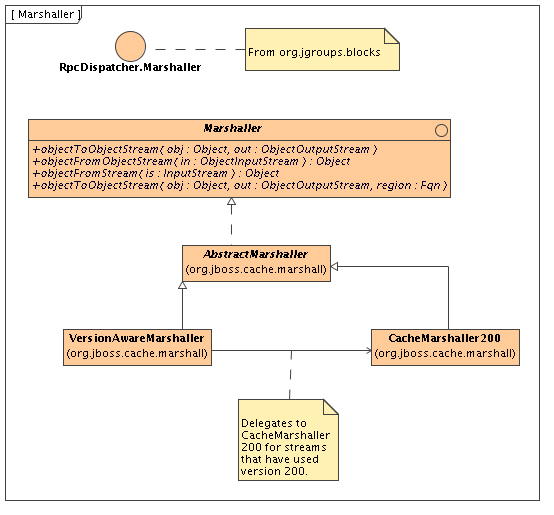

The Marshaller interface extends RpcDispatcher.Marshaller from JGroups. This interface has two main implementations: a delegating VersionAwareMarshaller and a concrete CacheMarshaller200.

The marshaller can be obtained by calling CacheSPI.getMarshaller(), and defaults to the VersionAwareMarshaller. Users may also write their own marshallers by implementing the Marshaller interface and specifying the implementation class with the MarshallerClass configuration attribute.

As the name suggests, VersionAwareMarshaller adds a version short to the start of any stream when writing, enabling other VersionAwareMarshaller instances to read the version short and know which specific marshaller implementation to delegate the call to. For example, CacheMarshaller200 is the marshaller for JBoss Cache 2.0.x, while JBoss Cache 2.1.x might ship with a CacheMarshaller210 with an improved wire protocol. Because each stream identifies the protocol version it was written with, a VersionAwareMarshaller helps maintain wire protocol compatibility between releases while still affording us the flexibility to tweak and improve the wire protocol between minor or micro releases.
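The delegation idea can be sketched as follows. The SketchMarshaller interface, the two toy delegates (Marshaller20, Marshaller21) and the version numbers 20 and 21 are all hypothetical and unrelated to the real wire formats. The sketch only demonstrates the mechanism: write a version short first, then read it back to choose the delegate that understands the rest of the stream.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;

// Hypothetical marshaller contract: write/read one object to/from a data stream.
interface SketchMarshaller {
    void write(Object o, DataOutputStream out) throws IOException;
    Object read(DataInputStream in) throws IOException;
}

// Toy "2.0" wire format: strings only, written with writeUTF.
final class Marshaller20 implements SketchMarshaller {
    public void write(Object o, DataOutputStream out) throws IOException { out.writeUTF((String) o); }
    public Object read(DataInputStream in) throws IOException { return in.readUTF(); }
}

// Toy "2.1" wire format: length-prefixed UTF-8 bytes.
final class Marshaller21 implements SketchMarshaller {
    public void write(Object o, DataOutputStream out) throws IOException {
        byte[] bytes = ((String) o).getBytes(StandardCharsets.UTF_8);
        out.writeInt(bytes.length);
        out.write(bytes);
    }
    public Object read(DataInputStream in) throws IOException {
        byte[] bytes = new byte[in.readInt()];
        in.readFully(bytes);
        return new String(bytes, StandardCharsets.UTF_8);
    }
}

final class VersionAwareSketch {
    private final Map<Short, SketchMarshaller> delegates = new HashMap<>();
    private final short writeVersion;

    VersionAwareSketch(short writeVersion) {
        delegates.put((short) 20, new Marshaller20());
        delegates.put((short) 21, new Marshaller21());
        if (!delegates.containsKey(writeVersion)) {
            throw new IllegalArgumentException("No delegate for version " + writeVersion);
        }
        this.writeVersion = writeVersion;
    }

    byte[] objectToBytes(Object o) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            DataOutputStream out = new DataOutputStream(bytes);
            out.writeShort(writeVersion);               // version header first
            delegates.get(writeVersion).write(o, out);  // then the delegate's payload
            out.flush();
            return bytes.toByteArray();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    Object objectFromBytes(byte[] buf) {
        try {
            DataInputStream in = new DataInputStream(new ByteArrayInputStream(buf));
            short version = in.readShort();             // header selects the delegate
            SketchMarshaller delegate = delegates.get(version);
            if (delegate == null) throw new IOException("Unknown wire version " + version);
            return delegate.read(in);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

In this sketch a node can read any version it has a delegate for, whatever version it writes itself. That property is what lets nodes running different releases interoperate.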

CacheMarshaller200 treats well-known objects that need marshalling, such as MethodCall, Fqn, DataVersion, and even some JDK objects such as String, List and Boolean, as types that do not need complete class definitions. Instead, each of these well-known types is represented by a short, which is a lot more efficient.

In addition, reference counting is used to avoid writing the same object to a stream multiple times, which helps keep the streams small and efficient.
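Both ideas can be combined in a minimal sketch built on a toy format. Well-known types are written as a one-short type tag instead of a class name, and a String that has already been written in the same stream is replaced by a back-reference to its earlier occurrence. The tag values, the TaggedWriter/TaggedReader classes and the handle scheme are hypothetical and are not CacheMarshaller200's actual wire format.

```java
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class TaggedWriter {
    // Hypothetical "magic numbers" for well-known types.
    static final short TAG_NULL = 0;
    static final short TAG_STRING = 1;
    static final short TAG_BOOLEAN = 2;
    static final short TAG_LIST = 3;
    static final short TAG_REF = 4; // back-reference to a String written earlier

    private final DataOutputStream out;
    private final Map<String, Integer> written = new HashMap<>(); // String -> handle

    TaggedWriter(DataOutputStream out) { this.out = out; }

    void write(Object o) throws IOException {
        if (o == null) {
            out.writeShort(TAG_NULL);
        } else if (o instanceof String) {
            String s = (String) o;
            Integer handle = written.get(s);
            if (handle != null) {            // seen before: write only the handle
                out.writeShort(TAG_REF);
                out.writeInt(handle);
            } else {                         // first occurrence: assign the next handle
                written.put(s, written.size());
                out.writeShort(TAG_STRING);
                out.writeUTF(s);
            }
        } else if (o instanceof Boolean) {
            out.writeShort(TAG_BOOLEAN);
            out.writeBoolean((Boolean) o);
        } else if (o instanceof List) {
            List<?> list = (List<?>) o;
            out.writeShort(TAG_LIST);
            out.writeInt(list.size());
            for (Object element : list) write(element);
        } else {
            throw new IOException("Not a well-known type in this sketch: " + o.getClass());
        }
    }
}

final class TaggedReader {
    private final DataInputStream in;
    private final List<String> seen = new ArrayList<>(); // handle -> String, in write order

    TaggedReader(DataInputStream in) { this.in = in; }

    Object read() throws IOException {
        short tag = in.readShort();
        switch (tag) {
            case TaggedWriter.TAG_NULL:
                return null;
            case TaggedWriter.TAG_STRING: {
                String s = in.readUTF();
                seen.add(s);
                return s;
            }
            case TaggedWriter.TAG_REF:
                return seen.get(in.readInt());
            case TaggedWriter.TAG_BOOLEAN:
                return in.readBoolean();
            case TaggedWriter.TAG_LIST: {
                int size = in.readInt();
                List<Object> list = new ArrayList<>(size);
                for (int i = 0; i < size; i++) list.add(read());
                return list;
            }
            default:
                throw new IOException("Unknown tag " + tag);
        }
    }
}
```

The reader rebuilds the handle table in the same order the writer assigned it, so the two sides never need to exchange it explicitly.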

Also, if UseRegionBasedMarshalling is enabled (it is disabled by default), the marshaller writes region information to the stream before any data. This region information is a String representation of an Fqn. When unmarshalling, the RegionManager can be used to find the relevant Region and use a region-specific ClassLoader to unmarshall the stream. This is particularly useful when clustering state for application servers, where each deployment has its own ClassLoader. See the section below on regions for more information.
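The following sketch illustrates region-based unmarshalling under simplifying assumptions. The region is written as a plain String Fqn ahead of a Java-serialized payload, and regions are kept in a hypothetical map from Fqn strings to class loaders, matched by the deepest containing region. On the read side, ObjectInputStream.resolveClass is overridden so that classes resolve through the region's ClassLoader. RegionAwareStreams is an illustration only, not the RegionManager API.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.ObjectStreamClass;
import java.io.Serializable;
import java.io.UncheckedIOException;
import java.util.HashMap;
import java.util.Map;

final class RegionAwareStreams {
    private final Map<String, ClassLoader> regionLoaders = new HashMap<>();

    void registerRegion(String fqn, ClassLoader loader) { regionLoaders.put(fqn, loader); }

    // Finds the deepest registered region containing the given Fqn, or null if none.
    String regionFor(String fqn) {
        String best = null;
        for (String region : regionLoaders.keySet()) {
            boolean contains = fqn.equals(region) || region.equals("/") || fqn.startsWith(region + "/");
            if (contains && (best == null || region.length() > best.length())) best = region;
        }
        return best;
    }

    byte[] write(String fqn, Serializable value) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                String region = regionFor(fqn);
                out.writeUTF(region == null ? "" : region); // region header precedes the data
                out.writeObject(value);
            }
            return bytes.toByteArray();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Resolves classes through a chosen loader once the region header has been read.
    private static final class LoaderAwareInput extends ObjectInputStream {
        ClassLoader loader; // null means default resolution

        LoaderAwareInput(InputStream in) throws IOException { super(in); }

        @Override
        protected Class<?> resolveClass(ObjectStreamClass desc) throws IOException, ClassNotFoundException {
            if (loader == null) return super.resolveClass(desc);
            return Class.forName(desc.getName(), false, loader);
        }
    }

    Object read(byte[] buf) {
        try (LoaderAwareInput in = new LoaderAwareInput(new ByteArrayInputStream(buf))) {
            String region = in.readUTF();
            in.loader = region.isEmpty() ? null : regionLoaders.get(region);
            return in.readObject();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

Because the region header is read before any object, the receiving side can switch to the deployment's class loader before it encounters a single application-specific class.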

When JBoss Cache is used to cluster state in application servers, deployed applications tend to put instances of application-specific objects in the cache (or in an HttpSession object), and these objects then require replication. It is common for application servers to assign a separate ClassLoader instance to each deployed application, while the JBoss Cache libraries are referenced by the application server's own ClassLoader.

To marshall and unmarshall objects from such class loaders successfully, we use a concept called regions. A region is a portion of the cache whose nodes share a common class loader (regions also have other uses; see eviction policies).

A region is created by calling the Cache.getRegion(Fqn fqn, boolean createIfNotExists) method, which returns an implementation of the Region interface. Once a region is obtained, a class loader can be set or unset for it, and the region can be activated or deactivated. By default, regions are active unless the InactiveOnStartup configuration attribute is set to true.
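The region lifecycle described above can be modelled in a minimal sketch. SketchRegion and SketchRegionManager are hypothetical classes covering only get-or-create lookup, an optional class loader and activation state. The inactiveOnStartup constructor flag stands in for the InactiveOnStartup attribute. Returning null when a region is absent and creation was not requested is a simplification of this sketch, and the real implementation may behave differently.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical region: an Fqn, an optional class loader and an activation flag.
final class SketchRegion {
    final String fqn;
    private ClassLoader classLoader;
    private boolean active;

    SketchRegion(String fqn, boolean active) {
        this.fqn = fqn;
        this.active = active;
    }

    void setClassLoader(ClassLoader loader) { this.classLoader = loader; }
    void unsetClassLoader() { this.classLoader = null; }
    ClassLoader getClassLoader() { return classLoader; }

    void activate() { active = true; }
    void deactivate() { active = false; }
    boolean isActive() { return active; }
}

final class SketchRegionManager {
    private final Map<String, SketchRegion> regions = new HashMap<>();
    private final boolean inactiveOnStartup; // mirrors the role of InactiveOnStartup

    SketchRegionManager(boolean inactiveOnStartup) { this.inactiveOnStartup = inactiveOnStartup; }

    // Same shape as getRegion(fqn, createIfNotExists). In this sketch it returns null
    // when the region is absent and creation was not requested.
    SketchRegion getRegion(String fqn, boolean createIfNotExists) {
        SketchRegion region = regions.get(fqn);
        if (region == null && createIfNotExists) {
            region = new SketchRegion(fqn, !inactiveOnStartup); // active unless configured otherwise
            regions.put(fqn, region);
        }
        return region;
    }
}
```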

This chapter discusses aspects of clustering JBoss Cache.

JBoss Cache can be configured to be either local (standalone) or clustered. When clustered, the cache can be configured either to replicate changes or to invalidate them. A detailed discussion of each option follows.

Local caches don't join a cluster and don't communicate with other caches in a cluster. Their elements therefore don't need to be serializable; however, we recommend making them serializable so that the cache mode can be changed at any time. JBoss Cache still depends on the JGroups library in local mode, although no JGroups channel is started.

Replicated caches replicate all changes to some or all of the other cache instances in the cluster. Replication can either happen after each modification (no transactions), or at the end of a transaction (commit time).

Replication can be synchronous or asynchronous, and which one to use depends on the application. Synchronous replication blocks the caller (e.g. on a put()) until the modifications have been replicated successfully to all nodes in the cluster. Asynchronous replication performs replication in the background (the put() returns immediately). JBoss Cache also offers a replication queue, where modifications are replicated periodically (i.e. interval-based), when the queue size exceeds a number of elements, or a combination of both.
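The replication queue's flushing rules can be sketched as follows: the queue flushes when it reaches a size threshold, and also periodically on a timer. ReplicationQueueSketch, its constructor parameters and the Consumer standing in for the broadcast to the cluster are hypothetical, not JBoss Cache classes or configuration attribute names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

final class ReplicationQueueSketch implements AutoCloseable {
    private final List<String> pending = new ArrayList<>();
    private final int maxElements;
    private final Consumer<List<String>> sender; // stands in for the network broadcast
    private final ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();

    ReplicationQueueSketch(int maxElements, long intervalMillis, Consumer<List<String>> sender) {
        this.maxElements = maxElements;
        this.sender = sender;
        // Interval-based flushing.
        timer.scheduleAtFixedRate(this::flush, intervalMillis, intervalMillis, TimeUnit.MILLISECONDS);
    }

    // Called instead of replicating immediately, so the caller never waits on the network.
    synchronized void add(String modification) {
        pending.add(modification);
        if (pending.size() >= maxElements) {
            flush(); // size-based flushing
        }
    }

    // Sends everything queued so far as one batch.
    synchronized void flush() {
        if (pending.isEmpty()) return;
        List<String> batch = new ArrayList<>(pending);
        pending.clear();
        sender.accept(batch); // one network call for many modifications
    }

    @Override
    public void close() {
        timer.shutdownNow();
        flush(); // do not lose modifications still waiting in the queue
    }
}
```

Batching trades latency for throughput: remote caches see a modification only after the next flush, but many small updates share a single network call.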

Asynchronous replication is faster (no caller blocking), because synchronous replication requires acknowledgments from all nodes in the cluster that they received and applied the modification successfully (round-trip time). However, when a synchronous replication returns successfully, the caller knows for sure that all modifications have been applied to all cache instances, whereas this is not the case with asynchronous replication. With asynchronous replication, errors are simply written to a log, so even when transactions are used, a transaction may succeed while replication fails on some cache instances.
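The caller-visible difference between the two modes can be sketched with CompletableFuture, where each simulated remote node is a function that returns whether it applied a modification. ReplicationModes and the node functions are hypothetical, and the remote nodes are simulated in-process. The sketch shows only that the synchronous path waits for every acknowledgement and surfaces failures to the caller, while the asynchronous path returns immediately and can only log failures.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.function.Function;
import java.util.logging.Logger;
import java.util.stream.Collectors;

final class ReplicationModes {
    private static final Logger LOG = Logger.getLogger(ReplicationModes.class.getName());

    // Each function simulates a remote node applying a modification (true = success).
    private final List<Function<String, Boolean>> remoteNodes;

    ReplicationModes(List<Function<String, Boolean>> remoteNodes) {
        this.remoteNodes = remoteNodes;
    }

    private List<CompletableFuture<Boolean>> send(String modification) {
        return remoteNodes.stream()
                .map(node -> CompletableFuture.supplyAsync(() -> node.apply(modification)))
                .collect(Collectors.toList());
    }

    // Synchronous: blocks until every node has answered; a failure reaches the caller.
    void replicateSync(String modification) {
        for (CompletableFuture<Boolean> ack : send(modification)) {
            if (!ack.join()) {
                throw new IllegalStateException("A node failed to apply " + modification);
            }
        }
    }

    // Asynchronous: returns immediately; a failure is only written to the log later.
    void replicateAsync(String modification) {
        for (CompletableFuture<Boolean> ack : send(modification)) {
            ack.thenAccept(ok -> {
                if (!ok) LOG.warning("Replication of " + modification + " failed");
            });
        }
    }
}
```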

When using transactions, replication only occurs at the transaction boundary, i.e. when a transaction commits. This minimises replication traffic, since all of the transaction's modifications are broadcast together rather than as a series of individual modifications, and can be a lot more efficient than not using transactions. Another effect of this is that if a transaction rolls back, nothing is broadcast across the cluster.

Depending on whether you are running your cluster in asynchronous or synchronous mode, JBoss Cache uses either a single-phase or a two-phase commit protocol, respectively.

Single-phase commit is used when your cache mode is REPL_ASYNC. All modifications are replicated in a single call, which instructs remote caches to apply the changes to their local in-memory state and commit locally. Remote errors and rollbacks are never fed back to the originator of the transaction, since the communication is asynchronous.

Two-phase commit is used when your cache mode is REPL_SYNC. Upon committing your transaction, JBoss Cache broadcasts a prepare call carrying all modifications relevant to the transaction. Remote caches then acquire local locks on their in-memory state and apply the modifications. Once all remote caches respond to the prepare call, the originator of the transaction broadcasts a commit, which instructs all remote caches to commit their data. If any of the caches fails to respond to the prepare phase, the originator broadcasts a rollback.

Note that although the prepare phase is synchronous, the commit and rollback phases are asynchronous. This is because Sun's JTA specification does not specify how transactional resources should deal with failures at this stage of a transaction, and other resources participating in the transaction may have indeterminate state anyway. We therefore avoid the overhead of synchronous communication for this phase of the transaction. That said, these phases can be forced to be synchronous using the SyncCommitPhase and SyncRollbackPhase configuration attributes.
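The commit protocols can be sketched from the originator's point of view. The Participant interface and TxCoordinatorSketch class are hypothetical, and remote calls are simulated as direct method calls. Modifications are only buffered while the transaction is open, so a rollback sends nothing. In REPL_ASYNC mode a single call applies and commits; in REPL_SYNC mode a prepare round comes first, followed by a commit or a rollback. Commit and rollback are void here and their outcome is never checked, which loosely mirrors the asynchronous second phase described above.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical remote participant; in a real cluster these would be RPCs.
interface Participant {
    boolean prepare(List<String> modifications);     // lock and apply tentatively
    void commit();
    void rollback();
    void applyAndCommit(List<String> modifications); // single-phase path
}

final class TxCoordinatorSketch {
    private final List<Participant> remotes;
    private final boolean synchronous; // true ~ REPL_SYNC, false ~ REPL_ASYNC
    private final List<String> modifications = new ArrayList<>();

    TxCoordinatorSketch(List<Participant> remotes, boolean synchronous) {
        this.remotes = remotes;
        this.synchronous = synchronous;
    }

    // While the transaction is open, modifications are only recorded locally.
    void record(String modification) {
        modifications.add(modification);
    }

    // Nothing has been broadcast yet, so rolling back needs no network traffic.
    void rollback() {
        modifications.clear();
    }

    // Returns true if, as far as the originator can tell, the transaction committed.
    boolean commit() {
        List<String> batch = new ArrayList<>(modifications);
        modifications.clear();
        if (!synchronous) {
            // Single-phase: one call per node; remote failures are never reported back.
            for (Participant p : remotes) p.applyAndCommit(batch);
            return true;
        }
        // Two-phase: every node must prepare successfully before anyone commits.
        boolean allPrepared = true;
        for (Participant p : remotes) {
            if (!p.prepare(batch)) {
                allPrepared = false;
                break;
            }
        }
        // Second phase: results of commit/rollback are not checked in this sketch.
        for (Participant p : remotes) {
            if (allPrepared) p.commit(); else p.rollback();
        }
        return allPrepared;
    }
}
```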

Buddy Replication allows you to avoid replicating your data to all instances in a cluster. Instead, each instance picks one or more 'buddies' in the cluster and only replicates to these specific buddies. This greatly helps scalability, as adding another instance to the cluster no longer adds memory and network traffic load to every existing instance.

One of the most common use cases of Buddy Replication is when a replicated cache is used by a servlet container to store HTTP session data. One of the prerequisites for buddy replication to work well and be a real benefit is session affinity, more casually known as sticky sessions in HTTP session replication terms. This means that frequently accessed data should always be accessed on the same instance rather than in a round-robin fashion, as this helps the cache cluster optimise how it chooses buddies and where it stores data, and minimises replication traffic.

If this is not possible, Buddy Replication may prove to be more of an overhead than a benefit.

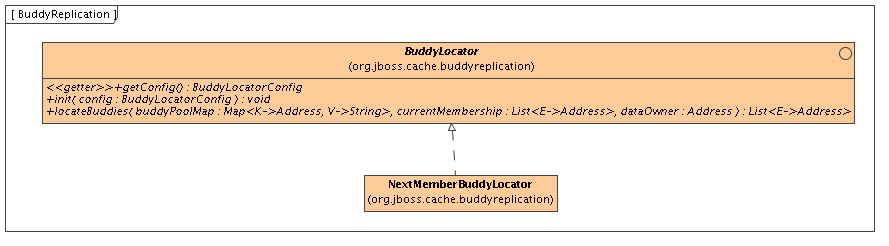

Buddy Replication uses an instance of a BuddyLocator, which contains the logic used to select buddies in a network. JBoss Cache currently ships with a single implementation, NextMemberBuddyLocator, which is used as a default if no implementation is provided. As the name suggests, the NextMemberBuddyLocator selects the next member in the cluster, which guarantees an even spread of buddies for each instance.

The NextMemberBuddyLocator takes two parameters, both optional:

- numBuddies - specifies how many buddies each instance should pick to back its data onto. This defaults to 1.

- ignoreColocatedBuddies - means that each instance will try to select a buddy on a different physical host. If not able to do so, it will fall back to colocated instances. This defaults to true.
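Next-member selection with these two parameters can be illustrated by a minimal sketch that assumes cluster members are given as an ordered list of hypothetical Member objects (a name plus a host). The sketch walks the membership list starting after the local member and wraps around. When ignoreColocatedBuddies is true it first takes members on other hosts and only falls back to colocated members if there are not enough. This mirrors the behaviour described above and is not NextMemberBuddyLocator's actual source.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical cluster member: a name and the physical host it runs on.
final class Member {
    final String name;
    final String host;

    Member(String name, String host) {
        this.name = name;
        this.host = host;
    }

    @Override
    public String toString() { return name; }
}

final class NextMemberLocatorSketch {
    private final int numBuddies;
    private final boolean ignoreColocatedBuddies;

    NextMemberLocatorSketch(int numBuddies, boolean ignoreColocatedBuddies) {
        this.numBuddies = numBuddies;
        this.ignoreColocatedBuddies = ignoreColocatedBuddies;
    }

    List<Member> locateBuddies(List<Member> members, Member self) {
        int start = members.indexOf(self);
        if (start < 0) throw new IllegalArgumentException("self is not a cluster member");

        // Candidates in "next member" order, wrapping around the end of the list.
        List<Member> candidates = new ArrayList<>();
        for (int i = 1; i < members.size(); i++) {
            candidates.add(members.get((start + i) % members.size()));
        }

        List<Member> buddies = new ArrayList<>();
        if (ignoreColocatedBuddies) {
            // First pass: prefer members on a different physical host.
            for (Member m : candidates) {
                if (buddies.size() < numBuddies && !m.host.equals(self.host)) buddies.add(m);
            }
        }
        // Fill any remaining slots in plain next-member order (the colocated fallback).
        for (Member m : candidates) {
            if (buddies.size() < numBuddies && !buddies.contains(m)) buddies.add(m);
        }
        return buddies;
    }
}
```

Since each member starts its search at its own successor, buddy responsibilities spread around the cluster rather than piling onto a single instance.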

Also known as replication groups, a buddy pool is an optional construct where each instance in a cluster may be configured with a buddy pool name. Think of this as an 'exclusive club membership': when selecting buddies, BuddyLocators that support buddy pools try to select buddies sharing the same buddy pool name. This gives system administrators a degree of flexibility and control over how buddies are selected. For example, a sysadmin may put two instances that run on separate physical servers, which may in turn sit on separate physical racks, in the same buddy pool. Rather than picking an instance on a different host on the same rack, BuddyLocators would then pick the instance in the same buddy pool on a separate rack, which may add a degree of redundancy.
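The effect of buddy pools on selection can be sketched as a preference ordering applied to the candidate list before buddies are picked. BuddyPoolPreference and the maps from member name to pool name and host are hypothetical. Treating "same pool" as a stronger preference than "different host" is an assumption of this sketch, based on the rack example above.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;

final class BuddyPoolPreference {
    // Orders candidates so that members sharing the local buddy pool name come first,
    // then members on a different host. List.sort is stable, so the original
    // (next-member) order is preserved among equally preferred candidates.
    static List<String> order(List<String> candidates, String self,
                              Map<String, String> poolOf, Map<String, String> hostOf) {
        String myPool = poolOf.get(self);
        List<String> ordered = new ArrayList<>(candidates);
        ordered.sort(Comparator
                .comparing((String m) -> !(myPool != null && myPool.equals(poolOf.get(m))))
                .thenComparing((String m) -> hostOf.get(m).equals(hostOf.get(self))));
        return ordered;
    }
}
```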

In the unfortunate event of an instance crashing, it is assumed that the client connecting to the cache (directly or indirectly, via some other service such as HTTP session replication) is able to redirect the request to any other random cache instance in the cluster. This is where the concept of Data Gravitation comes in.

Data Gravitation is a concept where if a request is made on a cache in the cluster and the cache does not contain this information, it asks other instances in the cluster for the data. In other words, data is lazily transferred, migrating only when other nodes ask for it. This strategy prevents a network storm effect where lots of data is pushed around healthy nodes because only one (or a few) of them die.

If the data is not found in the primary data tree of any other instance, the requesting cache can (optionally) ask other instances to check the backup data they store for other caches. This means that even if the cache holding your session dies, other instances will still be able to access this data by asking the cluster to search through their backups.

Once located, this data is transferred to the instance which requested it and is added to this instance's data tree. The data is then (optionally) removed from all other instances (and backups) so that if session affinity is used, the affinity should now be to this new cache instance which has just taken ownership of this data.

Data Gravitation is implemented as an interceptor. The following (all optional) configuration properties pertain to data gravitation.

- dataGravitationRemoveOnFind - forces all remote caches that own the data or hold backups for it to remove that data, thereby making the requesting cache the new data owner. This removal only happens after the new owner finishes replicating the data to its buddy. If set to false, an evict is broadcast instead of a remove, so any state persisted in cache loaders will remain. This is useful if you have a shared cache loader configured. Defaults to true.

- dataGravitationSearchBackupTrees - asks remote instances to search through their backups as well as their main data trees. Defaults to true. The resulting effect is that, if this is true, backup nodes can respond to data gravitation requests in addition to data owners.

- autoDataGravitation - whether data gravitation occurs for every cache miss. By default this is set to false to prevent unnecessary network calls. In most use cases the application knows when it may need to gravitate data, and can pass in an Option to enable data gravitation on a per-invocation basis. If autoDataGravitation is true, this Option is unnecessary.
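The gravitation steps and the three options above can be modelled in a minimal sketch where each simulated cache instance holds a primary map and a backup map. CacheInstance, GravitationSketch and the forceGravitation argument (standing in for the per-invocation Option) are hypothetical. The options are plain booleans named after the attributes above. Because this sketch has no cache loaders, the remove-versus-evict distinction collapses into a single removal, and replication of the gravitated data to the new owner's buddy is omitted.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Hypothetical cache instance: its own data plus data backed up for its buddies.
final class CacheInstance {
    final Map<String, String> primary = new HashMap<>();
    final Map<String, String> backup = new HashMap<>();
}

final class GravitationSketch {
    boolean removeOnFind = true;        // ~ dataGravitationRemoveOnFind
    boolean searchBackupTrees = true;   // ~ dataGravitationSearchBackupTrees
    boolean autoDataGravitation = false;

    // Looks up a key locally; on a miss, optionally gravitates it from the other instances.
    Optional<String> get(CacheInstance local, List<CacheInstance> others, String key, boolean forceGravitation) {
        String value = local.primary.get(key);
        if (value != null) return Optional.of(value);
        if (!autoDataGravitation && !forceGravitation) return Optional.empty(); // no network call

        value = findRemotely(others, key);
        if (value == null) return Optional.empty();

        local.primary.put(key, value); // the requesting instance becomes the new owner
        if (removeOnFind) {
            for (CacheInstance other : others) {
                other.primary.remove(key);
                other.backup.remove(key);
            }
        }
        return Optional.of(value);
    }

    // Primary data trees are searched first, then (optionally) backup trees.
    private String findRemotely(List<CacheInstance> others, String key) {
        for (CacheInstance other : others) {
            String v = other.primary.get(key);
            if (v != null) return v;
        }
        if (searchBackupTrees) {
            for (CacheInstance other : others) {
                String v = other.backup.get(key);
                if (v != null) return v;
            }
        }
        return null;
    }
}
```

For example, if a node that owned "/session/1" crashes and a survivor only holds a backup copy, a forced lookup on another node finds the backup, takes ownership, and (with removeOnFind) clears the stale copy from the survivor.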

<!-- Buddy Replication config -->

<attribute name="BuddyReplicationConfig">

<config>

<!-- Enables buddy replication. This is the ONLY mandatory configuration element here. -->

<buddyReplicationEnabled>true</buddyReplicationEnabled>

<!-- These are the default values anyway -->

<buddyLocatorClass>org.jboss.cache.buddyreplication.NextMemberBuddyLocator</buddyLocatorClass>

<!-- numBuddies is the number of backup nodes each node maintains. ignoreColocatedBuddies means

that each node will *try* to select a buddy on a different physical host. If not able to do so though,

it will fall back to colocated nodes. -->